Replace #32001.

To prevent the context cache from being misused for long-term work

(which would result in using invalid cache without awareness), the

context cache is designed to exist for a maximum of 10 seconds. This

leads to many false reports, especially in the case of slow SQL.

This PR increases it to 5 minutes to reduce false reports.

5 minutes is not a very safe value, as a lot of changes may have

occurred within that time frame. However, as far as I know, there has

not been a case of misuse of context cache discovered so far, so I think

5 minutes should be OK.

Please note that after this PR, if warning logs are found again, they

should get attention: at that point it is almost 100% certain that it

is a misuse.
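
A minimal sketch of the idea, assuming a hypothetical `contextCache` type (the real cache type and its method names are not shown in this message): the cache records its creation time and refuses to serve values once it is older than the limit, logging a warning instead.

```go
package main

import (
	"fmt"
	"time"
)

// maxLifetime mirrors the 5-minute limit described above.
const maxLifetime = 5 * time.Minute

// contextCache is a hypothetical request-scoped cache, not the real type.
type contextCache struct {
	created time.Time
	data    map[string]string
}

func newContextCache() *contextCache {
	return &contextCache{created: time.Now(), data: map[string]string{}}
}

// expired reports whether the cache has outlived maxLifetime at time now.
func (c *contextCache) expired(now time.Time) bool {
	return now.Sub(c.created) > maxLifetime
}

// get refuses to serve values from an expired cache and logs a warning,
// since at that point the cached data may be stale.
func (c *contextCache) get(now time.Time, key string) (string, bool) {
	if c.expired(now) {
		fmt.Println("warning: context cache outlived its lifetime, likely misuse")
		return "", false
	}
	v, ok := c.data[key]
	return v, ok
}

func main() {
	c := newContextCache()
	c.data["user:1"] = "alice"
	fmt.Println(c.get(c.created.Add(30*time.Second), "user:1"))
	fmt.Println(c.get(c.created.Add(6*time.Minute), "user:1"))
}
```

With a short limit, a slow query alone can trip the warning; with a long one, a warning points much more strongly at a cache that was kept around for long-running work.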

(cherry picked from commit a323a82ec4bde6ae39b97200439829bf67c0d31e)

(cherry picked from commit a5818470fe62677d8859b590b2d80b98fe23d098)

Signed-off-by: Gergely Nagy <forgejo@gergo.csillger.hu>

Conflicts:

- .github/ISSUE_TEMPLATE/bug-report.yaml

.github/ISSUE_TEMPLATE/config.yml

.github/ISSUE_TEMPLATE/feature-request.yaml

.github/ISSUE_TEMPLATE/ui.bug-report.yaml

templates/install.tmpl

All of these are Gitea-specific. Resolved the conflict by not

picking their change.

- Currently the `nosql` module (which, simply put, provides a manager

for Redis clients) returns the

[`redis.UniversalClient`](https://pkg.go.dev/github.com/redis/go-redis/v9#UniversalClient)

interface. The interface exposes all available commands.

- In general, dead code elimination should be able to take care of not

generating the machine code for methods that aren't being used. However,

in this specific case, dead code elimination either is disabled or gives

up because of the extensive call stack in which the client returned by

`GetRedisClient` is used.

- Help the Go compiler by explicitly specifying which methods we use.

This reduces the binary size by ~400KB (397312 bytes), as Go no longer

generates machine code for commands that aren't being used.

- There's a **CAVEAT** with this, if a developer wants to use a new

method that isn't specified, they will have to know about this

hack (by following the definition of existing Redis methods) and add the

method definition from the Redis library to the `RedisClient` interface.

- This is in the spirit of #5090.
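
The pattern can be sketched as follows. This is only an illustration: `wideClient` stands in for a client with many methods, and the method set chosen for `narrowClient` is hypothetical rather than the actual `RedisClient` definition.

```go
package main

import "fmt"

// wideClient stands in for a client type with many methods (hypothetical;
// the real Redis client exposes far more commands).
type wideClient struct{ store map[string]string }

func (c *wideClient) Get(key string) string { return c.store[key] }
func (c *wideClient) Set(key, value string) { c.store[key] = value }
func (c *wideClient) Del(key string)        { delete(c.store, key) }
func (c *wideClient) FlushAll()             { c.store = map[string]string{} }

// narrowClient lists only the methods the application actually calls.
// Using a method that is not listed here requires adding it first.
type narrowClient interface {
	Get(key string) string
	Set(key, value string)
}

// getClient hands out the narrow interface instead of the full type.
func getClient() narrowClient {
	return &wideClient{store: map[string]string{}}
}

func main() {
	c := getClient()
	c.Set("greeting", "hello")
	fmt.Println(c.Get("greeting"))
	// c.FlushAll() would not compile: FlushAll is not part of narrowClient.
}
```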

- Move to a fork of gitea.com/go-chi/cache,

code.forgejo.org/go-chi/cache. It removes unused code (a lot of

adapters, that can't be used by Forgejo) and unused dependencies (see

go.sum). Also updates existing dependencies.

8c64f1a362..main

- retrieved by the commit hash

- removes bindata tags from integration tests, because it does not seem

to be required

- due to the missing automatically generated data, the zstd tests fail

(they use repo data including node_modules (!) as input to the test,

there is no apparent reason for the size constants)

This adds a new configuration setting: `[quota.default].TOTAL`, which

will be used if no groups are configured for a particular user. The new

option makes it possible to entirely skip configuring quotas via the API

if all that one wants is a total size.

Signed-off-by: Gergely Nagy <forgejo@gergo.csillger.hu>

- Follow up of #4819

- When no `ssh` executable is present, disable the UI and backend bits

that allow the creation of push mirrors that use SSH authentication, as

this feature requires the usage of the `ssh` binary.

- Integration test added.
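
A minimal sketch of such a check, using `exec.LookPath` from the standard library. The helper name `hasExecutable` is an assumption for illustration; the actual detection code may differ.

```go
package main

import (
	"fmt"
	"os/exec"
)

// hasExecutable reports whether name can be found in one of the
// directories listed in PATH.
func hasExecutable(name string) bool {
	_, err := exec.LookPath(name)
	return err == nil
}

func main() {
	// The result would gate both the UI elements and the backend handlers.
	fmt.Println("SSH push mirrors available:", hasExecutable("ssh"))
}
```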

When opening a repository, it will call `ensureValidRepository` and also

`CatFileBatch`. But sometimes these will not be used until the repository

is closed. So it's a waste of CPU to invoke the git command 3 times for every

opened repository.

This PR removed all of these from `OpenRepository` but only kept

checking whether the folder exists. When a batch is necessary, the

necessary functions will be invoked.
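
The lazy approach can be sketched like this. The types and the `starts` counter are hypothetical and only serve the illustration; a real implementation would spawn the git helper process where the comment indicates.

```go
package main

import (
	"errors"
	"fmt"
	"os"
	"sync"
)

// batchReader stands in for a long-running git helper process.
type batchReader struct{}

type repository struct {
	path      string
	batchOnce sync.Once
	batch     *batchReader
	starts    int // how often the expensive helper was started
}

// openRepository only checks that the directory exists.
func openRepository(path string) (*repository, error) {
	info, err := os.Stat(path)
	if err != nil {
		return nil, err
	}
	if !info.IsDir() {
		return nil, errors.New("not a directory: " + path)
	}
	return &repository{path: path}, nil
}

// catFileBatch starts the helper on first use and reuses it afterwards.
func (r *repository) catFileBatch() *batchReader {
	r.batchOnce.Do(func() {
		r.starts++
		r.batch = &batchReader{} // a real implementation would spawn git here
	})
	return r.batch
}

func main() {
	repo, err := openRepository(".")
	if err != nil {
		fmt.Println("open failed:", err)
		return
	}
	fmt.Println("helper starts after open:", repo.starts)
	repo.catFileBatch()
	repo.catFileBatch()
	fmt.Println("helper starts after two uses:", repo.starts)
}
```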

---

Conflict resolution: Because of the removal of go-git in (#4941)

`_nogogit.go` files were either renamed or merged into the 'common'

file. Git does handle the renames correctly, but the files that were

merged had to be manually copy-pasted over. The patch looks the same as

the original patch: 201 additions, 90 deletions.

(cherry picked from commit c03baab678ba5b2e9d974aea147e660417f5d3f7)

- Moves to a fork of gitea.com/go-chi/session that removed support for

couchbase (and ledis, but that was never made available in Forgejo)

along with other code improvements.

f8ce677595..main

- The rationale for removing Couchbase is quite simple. It's not licensed

under a FOSS

license (https://www.couchbase.com/blog/couchbase-adopts-bsl-license/)

and therefore cannot be tested by Forgejo and shouldn't be supported.

This is in a similar vein to the removal of MSSQL

support (https://codeberg.org/forgejo/discussions/issues/122)

- An additional benefit is that this reduces the Forgejo binary by ~600KB.

- Continuation of https://github.com/go-gitea/gitea/pull/18835 (by

@Gusted, so it's fine to change copyright holder to Forgejo).

- Add the option to use SSH for push mirrors, this would allow for the

deploy keys feature to be used and not require tokens to be used which

cannot be limited to a specific repository. The private key is stored

encrypted (via the `keying` module) on the database and NEVER given to

the user, to avoid accidental exposure and misuse.

- CAVEAT: This does require the `ssh` binary to be present, which may

not be available in containerized environments. This could be solved by

adding an SSH client into Forgejo itself and using the Forgejo binary as

the SSH command, but that should be done in another PR.

- CAVEAT: Mirroring of LFS content is not supported, this would require

the previous stated problem to be solved due to LFS authentication (an

attempt was made at forgejo/forgejo#2544).

- Integration test added.

- Resolves #4416

The keying module tries to solve two problems, the lack of key

separation and the lack of AEAD being used for encryption. The currently

used `secrets` doesn't provide this and is hard to adjust to provide

this functionality.

For encryption, the additional data is now a parameter that can be used,

as the underlying primitive is an AEAD construction. This allows for

context binding to happen and can be seen as defense-in-depth; it

ensures that if a value X is encrypted for context Y (e.g. ID=3,

Column="private_key") it will only decrypt if that context Y is also

given in the Decrypt function. This makes confused deputy attacks harder

to exploit.[^1]

For key separation, HKDF is used to derive subkeys from some IKM, which

is the value of the `[security].SECRET_KEY` config setting. The contexts

for subkeys are hardcoded; any variable should be shuffled into the

additional data parameter when encrypting.
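
The two ideas can be sketched with the standard library alone. This is an illustration under stated assumptions, not the module's implementation: AES-GCM is used as the AEAD only because it ships with Go (this message does not name the AEAD actually used), the HKDF is a hand-written single-block HKDF-SHA256 with an empty salt, and all function names and context strings are hypothetical.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/hmac"
	"crypto/rand"
	"crypto/sha256"
	"errors"
	"fmt"
)

// deriveSubkey is single-block HKDF-SHA256 (RFC 5869) with an empty salt.
// One output block is enough for a 32-byte key.
func deriveSubkey(ikm []byte, context string) []byte {
	extract := hmac.New(sha256.New, make([]byte, sha256.Size))
	extract.Write(ikm)
	prk := extract.Sum(nil)

	expand := hmac.New(sha256.New, prk)
	expand.Write([]byte(context))
	expand.Write([]byte{1})
	return expand.Sum(nil)
}

func newAEAD(key []byte) (cipher.AEAD, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	return cipher.NewGCM(block)
}

// encrypt returns nonce || ciphertext, bound to additionalData.
func encrypt(key, plaintext, additionalData []byte) ([]byte, error) {
	aead, err := newAEAD(key)
	if err != nil {
		return nil, err
	}
	nonce := make([]byte, aead.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return nil, err
	}
	return aead.Seal(nonce, nonce, plaintext, additionalData), nil
}

// decrypt fails unless the same additionalData is supplied again.
func decrypt(key, ciphertext, additionalData []byte) ([]byte, error) {
	aead, err := newAEAD(key)
	if err != nil {
		return nil, err
	}
	if len(ciphertext) < aead.NonceSize() {
		return nil, errors.New("ciphertext too short")
	}
	nonce, sealed := ciphertext[:aead.NonceSize()], ciphertext[aead.NonceSize():]
	return aead.Open(nil, nonce, sealed, additionalData)
}

func main() {
	key := deriveSubkey([]byte("value of SECRET_KEY"), "example-context")
	ct, _ := encrypt(key, []byte("private key material"), []byte("ID=3;Column=private_key"))

	_, err := decrypt(key, ct, []byte("ID=3;Column=private_key"))
	fmt.Println("same context:", err == nil)
	_, err = decrypt(key, ct, []byte("ID=4;Column=private_key"))
	fmt.Println("other context:", err == nil)
}
```

Moving a ciphertext to another row or column changes the additional data, so decryption fails instead of silently yielding a value that was encrypted for a different purpose.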

[^1]: This is still possible, because the used AEAD construction is not

key-committing. For Forgejo's current use-case this risk is negligible,

because the subkeys aren't known to a malicious user (which is required

for such an attack), unless they also have access to the IKM (at which

point you can assume the whole system is compromised). See

https://scottarc.blog/2022/10/17/lucid-multi-key-deputies-require-commitment/

One method to set them all... or something like that.

The defaults for git-grep options were scattered over the run

function body. This change refactors them into a separate method.

The application of defaults is checked implicitly by existing

tests and linters, and the new approach makes it very easy

to verify that the desired defaults are set.
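
A minimal sketch of the refactoring, with a hypothetical option struct. The field names and default values below are illustrative and not taken from the actual code.

```go
package main

import "fmt"

// grepOptions is a hypothetical option set; the field names and the
// default values are illustrative only.
type grepOptions struct {
	ContextLines   int
	MaxResultLimit int
}

// ensureDefaults fills in every unset option in one place, instead of
// scattering the fallbacks over the function that runs the search.
func (o *grepOptions) ensureDefaults() {
	if o.ContextLines == 0 {
		o.ContextLines = 1 // illustrative value
	}
	if o.MaxResultLimit == 0 {
		o.MaxResultLimit = 50 // illustrative value
	}
}

func main() {
	opts := grepOptions{MaxResultLimit: 5}
	opts.ensureDefaults()
	fmt.Printf("%+v\n", opts)
}
```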

The forgejo/forgejo#2367 pull request added rel="nofollow" on filters in the

menu, this commit adds it on the labels in the listing and a few other places.

We need to shorten the timeout to effectively bound the

computation size. This protects against "too big" repos.

This also protects to some extent against too long lines

if kept to very low values (basically so that grep cannot run out

of memory beforehand).
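
As a rough sketch of how a timeout bounds the work: the function below cancels whatever it runs once the deadline passes. `runBounded` and `slowSearch` are hypothetical names, and the simulated search stands in for an external command that would typically be started with `exec.CommandContext`.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// shortTimeout is an illustrative value, not the configured default.
const shortTimeout = 20 * time.Millisecond

// runBounded runs work and cancels its context once timeout has elapsed.
func runBounded(timeout time.Duration, work func(ctx context.Context) error) error {
	ctx, cancel := context.WithTimeout(context.Background(), timeout)
	defer cancel()
	return work(ctx)
}

// slowSearch simulates a search that needs d to finish unless cancelled.
func slowSearch(d time.Duration) func(ctx context.Context) error {
	return func(ctx context.Context) error {
		select {
		case <-time.After(d):
			return nil
		case <-ctx.Done():
			return ctx.Err()
		}
	}
}

func main() {
	err := runBounded(shortTimeout, slowSearch(10*shortTimeout))
	fmt.Println("timed out:", errors.Is(err, context.DeadlineExceeded))
}
```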

Docs-PR: forgejo/docs#812

Fix #31271.

When gogit is enabled, `IsObjectExist` calls

`repo.gogitRepo.ResolveRevision`, which is not correct. It's for

checking references, not objects. It could work with a commit hash since

it's both a valid reference and a commit object, but it doesn't work

with blob objects.

So it causes #31271 because it reports that all blob objects do not

exist.

(cherry picked from commit f4d3120f9d1de6a260a5e625b3ffa6b35a069e9b)

Conflicts:

trivial resolution because go-git support was dropped https://codeberg.org/forgejo/forgejo/pulls/4941

Support compression for Actions logs to save storage space and

bandwidth. Inspired by

https://github.com/go-gitea/gitea/issues/24256#issuecomment-1521153015

The biggest challenge is that the compression format should support

[seekable](https://github.com/facebook/zstd/blob/dev/contrib/seekable_format/zstd_seekable_compression_format.md).

So when users are viewing a part of the log lines, Gitea doesn't need to

download the whole compressed file and decompress it.

That means gzip cannot help here. And I did research, there aren't too

many choices, like bgzip and xz, but I think zstd is the most popular

one. It has an implementation in Golang with

[zstd](https://github.com/klauspost/compress/tree/master/zstd) and

[zstd-seekable-format-go](https://github.com/SaveTheRbtz/zstd-seekable-format-go),

and what is better is that it has good compatibility: a seekable format

zstd file can be read by a regular zstd reader.

This PR introduces a new package `zstd` to combine and wrap the two

packages, to provide a unified and easy-to-use API.

And a new setting `LOG_COMPRESSION` is added to the config. Although I

don't see any reason not to use compression, I think it's a good

idea to keep the default as `none` to be consistent with old versions.

`LOG_COMPRESSION` takes effect only for new log files. It adds `.zst` as

an extension to the file name, so Gitea can determine if it needs

decompression according to the file name when reading. Old files will

keep the format since it's not worth converting them, as they will be

cleared after #31735.
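
The file-name convention can be sketched as below. The helper names are hypothetical, and `zstd` as the setting value that enables compression is an assumption (only `none` is named above).

```go
package main

import (
	"fmt"
	"strings"
)

const zstdSuffix = ".zst"

// storedName returns the name a new log file is written under.
// "zstd" as the enabling value is an assumption of this sketch.
func storedName(name, compression string) string {
	if compression == "zstd" {
		return name + zstdSuffix
	}
	return name
}

// needsDecompression decides from the file name alone, so old
// uncompressed logs keep working without any conversion.
func needsDecompression(name string) bool {
	return strings.HasSuffix(name, zstdSuffix)
}

func main() {
	for _, setting := range []string{"none", "zstd"} {
		name := storedName("42.log", setting)
		fmt.Println(name, needsDecompression(name))
	}
}
```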

<img width="541" alt="image"

src="https://github.com/user-attachments/assets/e9598764-a4e0-4b68-8c2b-f769265183c9">

(cherry picked from commit 33cc5837a655ad544b936d4d040ca36d74092588)

Conflicts:

assets/go-licenses.json

go.mod

go.sum

resolved with make tidy

If a pull request review was assigned to a team, the members of the team

were not shown in the "requested_reviewers" field, so the field was

null. As a solution, I added the team members to the array.

fix #31764

(cherry picked from commit 94cca8846e7d62c8a295d70c8199d706dfa60e5c)

There is no reason to reject initial dashes in git-grep

expressions... other than the code not supporting it previously.

A new method is introduced to relax the security checks.

- When people click on the logout button, an event is sent to all

browser tabs (actually to a shared worker) to notify them of this

logout. This is done in a blocking fashion, to ensure every registered

channel (which realistically should be one for every user because of the

shared worker) for a user receives this message. While doing this, it

locks the mutex for the eventsource module.

- Codeberg is currently observing a deadlock that's caused by this

blocking behavior: a channel isn't receiving the logout event. We

currently don't have a good theory of why this happens. This in

turn causes the logout functionality to stop working, and

people no longer receive notifications unless they refresh the page.

- This patch makes this message non-blocking, thus making it

consistent with the other messages. We don't see a good reason why this

specific event needs to be blocking and the commit introducing it

doesn't offer a rationale either.
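
For reference, a non-blocking channel send in Go is usually written with `select` and a `default` branch. The sketch below uses hypothetical names (`event`, `trySend`) and is not the eventsource module's code.

```go
package main

import "fmt"

type event struct{ name string }

// trySend delivers ev without blocking. It returns false when no receiver
// is ready and the channel buffer (if any) is full.
func trySend(ch chan<- event, ev event) bool {
	select {
	case ch <- ev:
		return true
	default:
		return false
	}
}

func main() {
	ch := make(chan event, 1)
	fmt.Println(trySend(ch, event{name: "logout"})) // buffer has room
	fmt.Println(trySend(ch, event{name: "logout"})) // buffer full, nobody reading
}
```

A blocking send in the second case would wait forever while holding whatever lock the caller took, which is the kind of deadlock described above.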

See https://codeberg.org/forgejo/discussions/issues/164 for the

rationale and discussion of this change.

Everything related to the `go-git` dependency is dropped (Only a single

instance is left in a test file to test for an XSS, it requires crafting

a commit that Git itself refuses to craft). `_gogit` files have

been removed entirely, `go:build !gogit` is removed, `XXX_nogogit.go` files

either have been renamed or had their code merged into the

`XXX.go` file.

It is a waste of resources to scan them looking for matches

because they are never returned back - they appear as empty

lines in the current format.

Notably, even if they were returned, it is unlikely that matching

in binary files makes sense when the goal is "code search".

Analogously to how it happens for MaxResultLimit.

The default of 20 is inspired by a well-known, commercial code

hosting platform.

Unbounded limits are risky because they expose Forgejo to a class

of DoS attacks where queries are crafted to take advantage of

missing bounds.

Previous arch package grouping was not well-suited for complex or multi-architecture environments. It now supports the following content:

- Support grouping by any path.

- New support for packages in `xz` format.

- Fix clean up rules

<!--start release-notes-assistant-->

## Draft release notes

<!--URL:https://codeberg.org/forgejo/forgejo-->

- Features

- [PR](https://codeberg.org/forgejo/forgejo/pulls/4903): <!--number 4903 --><!--line 0 --><!--description c3VwcG9ydCBncm91cGluZyBieSBhbnkgcGF0aCBmb3IgYXJjaCBwYWNrYWdl-->support grouping by any path for arch package<!--description-->

<!--end release-notes-assistant-->

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/4903

Reviewed-by: Earl Warren <earl-warren@noreply.codeberg.org>

Co-authored-by: Exploding Dragon <explodingfkl@gmail.com>

Co-committed-by: Exploding Dragon <explodingfkl@gmail.com>

- Fix "WARNING: item list for enum is not a valid JSON array, using the

old deprecated format" messages from

https://github.com/go-swagger/go-swagger in the CI.

- `CheckOAuthAccessToken` returns both user ID and additional scopes

- `grantAdditionalScopes` returns AccessTokenScope ready string (grantScopes)

compiled from requested additional scopes by the client

- `userIDFromToken` sets returned grantScopes (if any) instead of default `all`

Provide a bit more journald integration. Specifically:

- support emission of printk-style log level prefixes, documented in [`sd-daemon`(3)](https://man7.org/linux/man-pages/man3/sd-daemon.3.html#DESCRIPTION), that allow journald to automatically annotate stderr log lines with their level;

- add a new "journaldflags" item that is supposed to be used in place of "stdflags" when under journald to reduce log clutter (i. e. strip date/time info to avoid duplication, and use log level prefixes instead of textual log levels);

- detect whether stderr and/or stdout are attached to journald by parsing `$JOURNAL_STREAM` environment variable and adjust console logger defaults accordingly.
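
Two of the building blocks can be sketched in a few lines. `$JOURNAL_STREAM` holds the device and inode number of the journal stream as `<device>:<inode>` in decimal, and the printk-style prefix is the syslog priority in angle brackets. The function names are hypothetical, and the comparison against the actual file descriptor (an `fstat` on stderr or stdout) is left out.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseJournalStream splits a "<device>:<inode>" value as found in
// the JOURNAL_STREAM environment variable.
func parseJournalStream(value string) (dev, ino uint64, err error) {
	devStr, inoStr, ok := strings.Cut(value, ":")
	if !ok {
		return 0, 0, fmt.Errorf("malformed JOURNAL_STREAM value %q", value)
	}
	if dev, err = strconv.ParseUint(devStr, 10, 64); err != nil {
		return 0, 0, err
	}
	if ino, err = strconv.ParseUint(inoStr, 10, 64); err != nil {
		return 0, 0, err
	}
	return dev, ino, nil
}

// levelPrefix returns the printk-style prefix for a syslog priority,
// for example "<3>" for errors and "<7>" for debug messages.
func levelPrefix(priority int) string {
	return "<" + strconv.Itoa(priority) + ">"
}

func main() {
	fmt.Println(parseJournalStream("8:12345"))
	fmt.Println(levelPrefix(3) + "something failed")
}
```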

<!--start release-notes-assistant-->

## Draft release notes

<!--URL:https://codeberg.org/forgejo/forgejo-->

- Features

- [PR](https://codeberg.org/forgejo/forgejo/pulls/2869): <!--number 2869 --><!--line 0 --><!--description bG9nOiBqb3VybmFsZCBpbnRlZ3JhdGlvbg==-->log: journald integration<!--description-->

<!--end release-notes-assistant-->

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/2869

Reviewed-by: Earl Warren <earl-warren@noreply.codeberg.org>

Co-authored-by: Ivan Shapovalov <intelfx@intelfx.name>

Co-committed-by: Ivan Shapovalov <intelfx@intelfx.name>

- Fixes an XSS that was introduced in

https://codeberg.org/forgejo/forgejo/pulls/1433

- This XSS allows for `href`s in anchor elements to be set to a

`javascript:` uri in the repository description, which would upon

clicking (and not upon loading) the anchor element execute the specified

javascript in that uri.

- [`AllowStandardURLs`](https://pkg.go.dev/github.com/microcosm-cc/bluemonday#Policy.AllowStandardURLs) is now called for the repository description

policy, which ensures that URIs in anchor elements are `mailto:`,

`http://` or `https://` and thereby disallowing the `javascript:` URI.

It also now allows non-relative links and sets `rel="nofollow"` on

anchor elements.

- Unit test added.

Now that my colleague just posted a wonderful blog post https://blog.datalad.org/posts/forgejo-runner-podman-deployment/ on forgejo runner, some time I will try to add that damn codespell action to work on CI here ;) meanwhile some typos managed to sneak in and this PR should address them (one change might be functional in a test -- not sure if would cause a fail or not)

### Release notes

- [ ] I do not want this change to show in the release notes.

- [ ] I want the title to show in the release notes with a link to this pull request.

- [ ] I want the content of the `release-notes/<pull request number>.md` to be used for the release notes instead of the title.

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/4857

Reviewed-by: Earl Warren <earl-warren@noreply.codeberg.org>

Co-authored-by: Yaroslav Halchenko <debian@onerussian.com>

Co-committed-by: Yaroslav Halchenko <debian@onerussian.com>

These are the three conflicted changes from #4716:

* https://github.com/go-gitea/gitea/pull/31632

* https://github.com/go-gitea/gitea/pull/31688

* https://github.com/go-gitea/gitea/pull/31706

cc @earl-warren; as per discussion on https://github.com/go-gitea/gitea/pull/31632 this involves a small compatibility break (OIDC introspection requests now require a valid client ID and secret, instead of a valid OIDC token)

## Checklist

The [developer guide](https://forgejo.org/docs/next/developer/) contains information that will be helpful to first time contributors. There also are a few [conditions for merging Pull Requests in Forgejo repositories](https://codeberg.org/forgejo/governance/src/branch/main/PullRequestsAgreement.md). You are also welcome to join the [Forgejo development chatroom](https://matrix.to/#/#forgejo-development:matrix.org).

### Tests

- I added test coverage for Go changes...

- [ ] in their respective `*_test.go` for unit tests.

- [x] in the `tests/integration` directory if it involves interactions with a live Forgejo server.

### Documentation

- [ ] I created a pull request [to the documentation](https://codeberg.org/forgejo/docs) to explain to Forgejo users how to use this change.

- [ ] I did not document these changes and I do not expect someone else to do it.

### Release notes

- [ ] I do not want this change to show in the release notes.

- [ ] I want the title to show in the release notes with a link to this pull request.

- [ ] I want the content of the `release-notes/<pull request number>.md` to be used for the release notes instead of the title.

<!--start release-notes-assistant-->

## Draft release notes

<!--URL:https://codeberg.org/forgejo/forgejo-->

- Breaking features

- [PR](https://codeberg.org/forgejo/forgejo/pulls/4724): <!--number 4724 --><!--line 0 --><!--description T0lEQyBpbnRlZ3JhdGlvbnMgdGhhdCBQT1NUIHRvIGAvbG9naW4vb2F1dGgvaW50cm9zcGVjdGAgd2l0aG91dCBzZW5kaW5nIEhUVFAgYmFzaWMgYXV0aGVudGljYXRpb24gd2lsbCBub3cgZmFpbCB3aXRoIGEgNDAxIEhUVFAgVW5hdXRob3JpemVkIGVycm9yLiBUbyBmaXggdGhlIGVycm9yLCB0aGUgY2xpZW50IG11c3QgYmVnaW4gc2VuZGluZyBIVFRQIGJhc2ljIGF1dGhlbnRpY2F0aW9uIHdpdGggYSB2YWxpZCBjbGllbnQgSUQgYW5kIHNlY3JldC4gVGhpcyBlbmRwb2ludCB3YXMgcHJldmlvdXNseSBhdXRoZW50aWNhdGVkIHZpYSB0aGUgaW50cm9zcGVjdGlvbiB0b2tlbiBpdHNlbGYsIHdoaWNoIGlzIGxlc3Mgc2VjdXJlLg==-->OIDC integrations that POST to `/login/oauth/introspect` without sending HTTP basic authentication will now fail with a 401 HTTP Unauthorized error. To fix the error, the client must begin sending HTTP basic authentication with a valid client ID and secret. This endpoint was previously authenticated via the introspection token itself, which is less secure.<!--description-->

<!--end release-notes-assistant-->

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/4724

Reviewed-by: Earl Warren <earl-warren@noreply.codeberg.org>

Co-authored-by: Shivaram Lingamneni <slingamn@cs.stanford.edu>

Co-committed-by: Shivaram Lingamneni <slingamn@cs.stanford.edu>

I was facing issues while writing unit tests for federation code. Mocks weren't catching all network calls, because the client was out of scope of the mocking infra. Plus, I think we can have more granular tests.

This PR puts the client behind an interface, that can be retrieved from `ctx`. Context doesn't require initialization, as it defaults to the implementation available in-tree. It may be overridden when required (like testing).

## Mechanism

1. Get client factory from `ctx` (factory contains network and crypto parameters that are needed)

2. Initialize client with sender's keys and the receiver's public key

3. Use client as before.
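
The mechanism above can be sketched as follows. All type and function names here are hypothetical stand-ins; only the overall shape (a factory looked up in the context, with an in-tree default and a test override) follows the description.

```go
package main

import (
	"context"
	"fmt"
)

type client interface {
	Post(url string, body []byte) error
}

type clientFactory interface {
	NewClient(senderKey, receiverPublicKey string) client
}

type factoryKey struct{}

// defaultFactory is used whenever the context carries no override.
type defaultFactory struct{}
type defaultClient struct{}

func (defaultFactory) NewClient(_, _ string) client { return defaultClient{} }
func (defaultClient) Post(url string, body []byte) error {
	return fmt.Errorf("sketch only: would POST %d bytes to %s", len(body), url)
}

func withClientFactory(ctx context.Context, f clientFactory) context.Context {
	return context.WithValue(ctx, factoryKey{}, f)
}

func clientFactoryFrom(ctx context.Context) clientFactory {
	if f, ok := ctx.Value(factoryKey{}).(clientFactory); ok {
		return f
	}
	return defaultFactory{}
}

// recordingFactory is a test double that records URLs instead of sending.
type recordingFactory struct{ urls *[]string }
type recordingClient struct{ urls *[]string }

func (f recordingFactory) NewClient(_, _ string) client { return recordingClient{f.urls} }
func (c recordingClient) Post(url string, _ []byte) error {
	*c.urls = append(*c.urls, url)
	return nil
}

// testContext returns a context that carries the recording factory.
func testContext(urls *[]string) context.Context {
	return withClientFactory(context.Background(), recordingFactory{urls})
}

// deliver follows the three steps: get factory, build client, use it.
func deliver(ctx context.Context, url string) error {
	c := clientFactoryFrom(ctx).NewClient("sender-key", "receiver-public-key")
	return c.Post(url, []byte("{}"))
}

func main() {
	fmt.Println(deliver(context.Background(), "https://example.org/inbox"))
	var urls []string
	fmt.Println(deliver(testContext(&urls), "https://example.org/inbox"), urls)
}
```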

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/4853

Reviewed-by: Earl Warren <earl-warren@noreply.codeberg.org>

Co-authored-by: Aravinth Manivannan <realaravinth@batsense.net>

Co-committed-by: Aravinth Manivannan <realaravinth@batsense.net>

- If you have the external issue setting enabled, any reference would

have been rendered as an external issue, however this shouldn't be

happening to references that refer to issues in other repositories.

- Unit test added.

Mastodon with `AUTHORIZED_FETCH` enabled requires the `Host` header to

be signed too, add it to the default for `setting.Federation.GetHeaders`

and `setting.Federation.PostHeaders`.

For this to work, we need to sign the request later: not immediately

after `NewRequest`, but just before sending them out with `client.Do`.

Doing so also lets us use `setting.Federation.GetHeaders` (we were using

`.PostHeaders` even for GET requests before).

Signed-off-by: Gergely Nagy <forgejo@gergo.csillger.hu>

Part of #24256.

Clear up old action logs to free up storage space.

Users will see a message indicating that the log has been cleared if

they view old tasks.

<img width="1361" alt="image"

src="https://github.com/user-attachments/assets/9f0f3a3a-bc5a-402f-90ca-49282d196c22">

Docs: https://gitea.com/gitea/docs/pulls/40

---------

Co-authored-by: silverwind <me@silverwind.io>

(cherry picked from commit 687c1182482ad9443a5911c068b317a91c91d586)

Conflicts:

custom/conf/app.example.ini

routers/web/repo/actions/view.go

trivial context conflict

Fix #31137.

Replace #31623 #31697.

When migrating LFS objects, if any object fails (for example, some

objects are lost, which is not really critical), Gitea will stop

migrating LFS immediately but treat the migration as successful.

This PR checks the error according to the [LFS api

doc](https://github.com/git-lfs/git-lfs/blob/main/docs/api/batch.md#successful-responses).

> LFS object error codes should match HTTP status codes where possible:

>

> - 404 - The object does not exist on the server.

> - 409 - The specified hash algorithm disagrees with the server's

acceptable options.

> - 410 - The object was removed by the owner.

> - 422 - Validation error.

If the error is `404`, it's safe to ignore it and continue migration.

Otherwise, stop the migration and mark it as failed to ensure data

integrity of LFS objects.

And maybe we should also ignore other errors (maybe `410`? I'm not sure

what's the difference between "does not exist" and "removed by the

owner".), we can add it later when some users report that they have

failed to migrate LFS because of an error which should be ignored.
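
The decision rule can be sketched like this. `objectError`, `shouldSkip` and `migrate` are hypothetical names; the only behavior taken from the description is that `404` is skipped and any other object error fails the migration.

```go
package main

import (
	"fmt"
	"net/http"
)

// objectError is a per-object error as reported in an LFS batch response.
type objectError struct {
	Code    int
	Message string
}

// shouldSkip reports whether the migration may continue past this error.
// Only 404 is skipped for now; 410 could be added later if needed.
func shouldSkip(e *objectError) bool {
	return e != nil && e.Code == http.StatusNotFound
}

// migrate walks the per-object results (nil means success) and stops
// with an error on the first failure that must not be ignored.
func migrate(results []*objectError) (migrated, skipped int, err error) {
	for _, e := range results {
		switch {
		case e == nil:
			migrated++
		case shouldSkip(e):
			skipped++
		default:
			return migrated, skipped, fmt.Errorf("LFS object error %d: %s", e.Code, e.Message)
		}
	}
	return migrated, skipped, nil
}

func main() {
	fmt.Println(migrate([]*objectError{nil, {Code: 404, Message: "not found"}, nil}))
	fmt.Println(migrate([]*objectError{nil, {Code: 422, Message: "validation error"}}))
}
```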

(cherry picked from commit 09b56fc0690317891829906d45c1d645794c63d5)

This is an implementation of a quota engine, and the API routes to

manage its settings. This does *not* contain any enforcement code: this

is just the bedrock, the engine itself.

The goal of the engine is to be flexible and future proof: to be nimble

enough to build on it further, without having to rewrite large parts of

it.

It might feel a little more complicated than necessary, because the goal

was to be able to support scenarios only very few Forgejo instances

need, scenarios the vast majority of mostly smaller instances simply do

not care about. The goal is to support both big and small, and for that,

we need a solid, flexible foundation.

There are three big parts to the engine: counting quota use, setting

limits, and evaluating whether the usage is within the limits. Sounds

simple on paper, less so in practice!

Quota counting

==============

Quota is counted based on repo ownership, whenever possible, because

repo owners are in ultimate control over the resources they use: they

can delete repos, attachments, everything, even if they don't *own*

those themselves. They can clean up, and will always have the permission

and access required to do so. If we counted quota based on the owning

user, that could lead to situations where a user is unable to free up

space, because they uploaded a big attachment to a repo that has been

taken private since. It's both more fair, and much safer to count quota

against repo owners.

This means that if user A uploads an attachment to an issue opened

against organization O, that will count towards the quota of

organization O, rather than user A.

One's quota usage stats can be queried using the `/user/quota` API

endpoint. To figure out what's eating into it, the

`/user/repos?order_by=size`, `/user/quota/attachments`,

`/user/quota/artifacts`, and `/user/quota/packages` endpoints should be

consulted. There's also `/user/quota/check?subject=<...>` to check

whether the signed-in user is within a particular quota limit.

Quotas are counted based on sizes stored in the database.

Setting quota limits

====================

There are different "subjects" one can limit usage for. At this time,

only size-based limits are implemented, which are:

- `size:all`: As the name would imply, the total size of everything

Forgejo tracks.

- `size:repos:all`: The total size of all repositories (not including

LFS).

- `size:repos:public`: The total size of all public repositories (not

including LFS).

- `size:repos:private`: The total size of all private repositories (not

including LFS).

- `size:git:all`: The total size of all git data (including all

repositories, and LFS).

- `size:git:lfs`: The size of all git LFS data (either in private or

public repos).

- `size:assets:all`: The size of all assets tracked by Forgejo.

- `size:assets:attachments:all`: The size of all kinds of attachments

tracked by Forgejo.

- `size:assets:attachments:issues`: Size of all attachments attached to

issues, including issue comments.

- `size:assets:attachments:releases`: Size of all attachments attached

to releases. This does *not* include automatically generated archives.

- `size:assets:artifacts`: Size of all Action artifacts.

- `size:assets:packages:all`: Size of all Packages.

- `size:wiki`: Wiki size

Wiki size is currently not tracked, and the engine will always deem it

within quota.

These subjects are built into Rules, which set a limit on *all* subjects

within a rule. Thus, we can create a rule that says: "1Gb limit on all

release assets, all packages, and git LFS, combined". For a rule to

stand, the total sum of all subjects must be below the rule's limit.

Rules are in turn collected into groups. A group is just a name, and a

list of rules. For a group to stand, all of its rules must stand. Thus,

if we have a group with two rules, one that sets a combined 1Gb limit on

release assets, all packages, and git LFS, and another rule that sets a

256Mb limit on packages, if the user has 512Mb of packages, the group

will not stand, because the second rule deems it over quota. Similarly,

if the user has only 128Mb of packages, but 900Mb of release assets, the

group will not stand, because the combined size of packages and release

assets is over the 1Gb limit of the first rule.

Groups themselves are collected into Group Lists. A group list stands

when *any* of the groups within stand. This allows an administrator to

set conservative defaults, but then place select users into additional

groups that increase some aspect of their limits.
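
The evaluation logic described above can be sketched in a few lines. The types are hypothetical, `size:git:lfs` is assumed to be the spelling of the LFS subject, and the comparison is strict because the text says usage must be below the limit; how the engine treats an empty group list or special limit values is not modelled here.

```go
package main

import "fmt"

const (
	mib int64 = 1 << 20
	gib int64 = 1 << 30
)

// usage maps a subject name to the number of bytes used.
type usage map[string]int64

type rule struct {
	limit    int64
	subjects []string
}

type group struct {
	name  string
	rules []rule
}

type groupList []group

// stands: the combined usage of all subjects must be below the limit.
func (r rule) stands(u usage) bool {
	var total int64
	for _, s := range r.subjects {
		total += u[s]
	}
	return total < r.limit
}

// stands: every rule of the group must stand.
func (g group) stands(u usage) bool {
	for _, r := range g.rules {
		if !r.stands(u) {
			return false
		}
	}
	return true
}

// stands: at least one group of the list must stand.
func (l groupList) stands(u usage) bool {
	for _, g := range l {
		if g.stands(u) {
			return true
		}
	}
	return false
}

const (
	releases = "size:assets:attachments:releases"
	packages = "size:assets:packages:all"
	lfs      = "size:git:lfs" // assumed spelling of the LFS subject
)

// exampleGroup is the two-rule group from the text above.
var exampleGroup = group{name: "example", rules: []rule{
	{limit: gib, subjects: []string{releases, packages, lfs}},
	{limit: 256 * mib, subjects: []string{packages}},
}}

func main() {
	fmt.Println(exampleGroup.stands(usage{packages: 512 * mib}))
	fmt.Println(exampleGroup.stands(usage{packages: 128 * mib, releases: 900 * mib}))
	fmt.Println(exampleGroup.stands(usage{packages: 128 * mib, releases: 100 * mib}))
}
```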

To top it off, it is possible to set the default quota groups a user

belongs to in `app.ini`. If there's no explicit assignment, the engine

will use the default groups. This makes it possible to avoid having to

assign each and every user a list of quota groups, and only those need

to be explicitly assigned who need a different set of groups than the

defaults.

If a user has any quota groups assigned to them, the default list will

not be considered for them.

The management APIs

===================

This commit contains the engine itself, its unit tests, and the quota

management APIs. It does not contain any enforcement.

The APIs are documented in-code, and in the swagger docs, and the

integration tests can serve as an example on how to use them.

Signed-off-by: Gergely Nagy <forgejo@gergo.csillger.hu>

- It's possible to detect if refresh tokens are used more than once. If

one is used more than once, it's an indication of a replay attack and it should

invalidate the associated access token. This behavior is controlled by

the `INVALIDATE_REFRESH_TOKENS` setting.

- Although in a normal scenario where TLS is being used it should be

very hard to get into a situation where replay attacks are possible,

it is better to be safe than sorry.

- Enable `INVALIDATE_REFRESH_TOKENS` by default.
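
One way such detection can work is sketched below, under the assumption that each refresh token carries a counter that must match the counter stored for the grant. The `grant` type and its fields are hypothetical and this is not necessarily how the setting is implemented.

```go
package main

import (
	"errors"
	"fmt"
)

var (
	errReplay  = errors.New("refresh token reused, grant invalidated")
	errRevoked = errors.New("grant has been invalidated")
)

// grant is a hypothetical record of an authorization.
type grant struct {
	counter int  // counter value the next valid refresh token must carry
	revoked bool // set once a replay has been detected
}

// refresh accepts a token only if it carries the current counter value.
// A stale counter means the token was already used once before.
func (g *grant) refresh(tokenCounter int) (int, error) {
	if g.revoked {
		return 0, errRevoked
	}
	if tokenCounter != g.counter {
		g.revoked = true
		return 0, errReplay
	}
	g.counter++
	return g.counter, nil
}

func main() {
	g := &grant{}
	fmt.Println(g.refresh(0)) // first use of the token issued with counter 0
	fmt.Println(g.refresh(0)) // same token again: treated as a replay
	fmt.Println(g.refresh(1)) // even the newer token is now rejected
}
```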

* Closes https://codeberg.org/forgejo/forgejo/issues/4563

* A followup to my 2024-February investigation in the Localization room

* Restore Malayalam and Serbian locales that were deleted in 067b0c2664 and f91092453e. Bulgarian was also deleted, but we already have a better Bulgarian translation.

* Remove ml-IN from the language selector. It was not usable for 1.5 years, has ~18% completion and was not maintained in those ~1.5 years. It could also have placeholder bugs due to refactors.

Restoring files gives the translators a base to work with and makes the project advertised on Weblate homepage for logged in users in the Suggestions tab. Unlike Gitea, we store our current translations directly in the repo and not on a separate platform, so it makes sense to add these files back.

Removing selector entry avoids bugs and user confusion. I will make a followup for the documentation.

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/4576

Reviewed-by: twenty-panda <twenty-panda@noreply.codeberg.org>

Make it possible to let mails show e.g.:

`Max Musternam (via gitea.kithara.com) <gitea@kithara.com>`

Docs: https://gitea.com/gitea/docs/pulls/23

---

*Sponsored by Kithara Software GmbH*

(cherry picked from commit 0f533241829d0d48aa16a91e7dc0614fe50bc317)

Conflicts:

- services/mailer/mail_release.go

services/mailer/mail_test.go

In both cases, applied the changes manually.

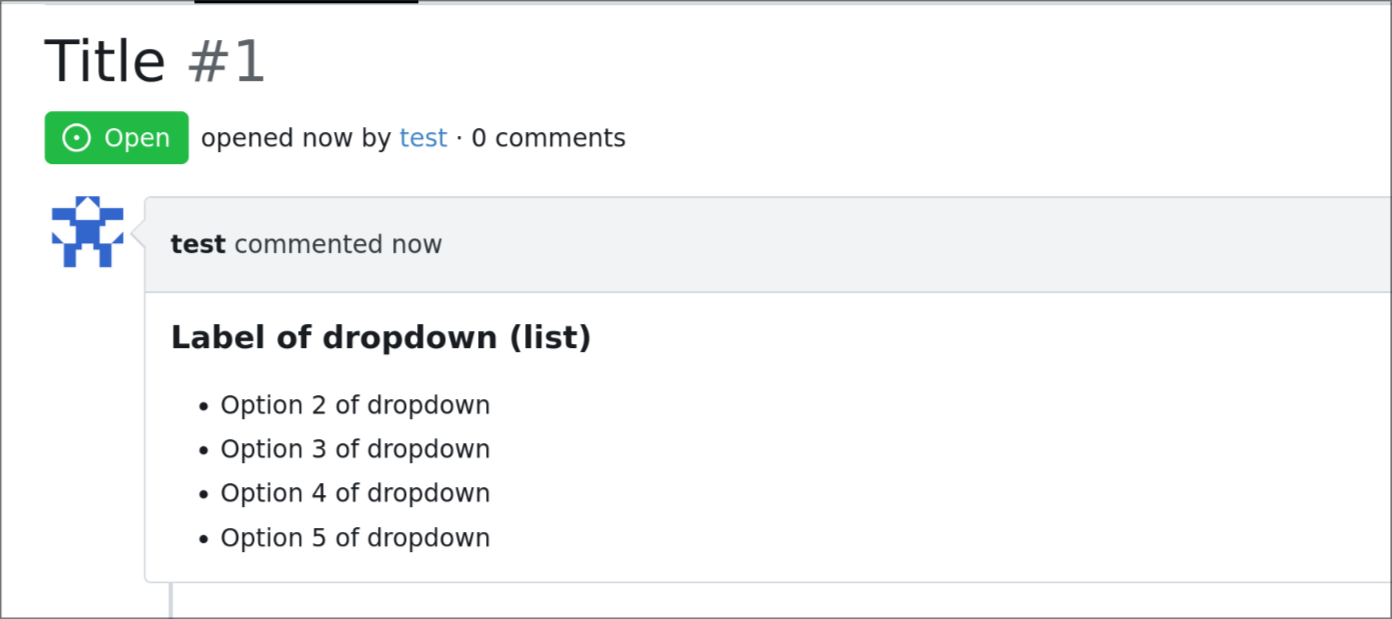

An issue template dropdown can have many entries, and it can be better to

have them rendered as a list later on if multi-select is enabled,

so this adds an option to the issue template engine to do so.

DOCS: https://gitea.com/gitea/docs/pulls/19

---

## demo:

```yaml
name: Name
title: Title
about: About
labels: ["label1", "label2"]
ref: Ref
body:
  - type: dropdown
    id: id6
    attributes:
      label: Label of dropdown (list)
      description: Description of dropdown
      multiple: true
      list: true
      options:
        - Option 1 of dropdown
        - Option 2 of dropdown
        - Option 3 of dropdown
        - Option 4 of dropdown
        - Option 5 of dropdown
        - Option 6 of dropdown
        - Option 7 of dropdown
        - Option 8 of dropdown
        - Option 9 of dropdown
```

---

*Sponsored by Kithara Software GmbH*

(cherry picked from commit 1064e817c4a6fa6eb5170143150505503c4ef6ed)

- On an empty blockquote the callout feature would panic, as it expects

to always have at least one child.

- This panic cannot result in a DoS, because any panic that happens

while rendering any markdown input will be recovered gracefully.

- Adds a simple condition to avoid this panic.

- Update the `github.com/santhosh-tekuri/jsonschema` library from v5 to

v6.

- Update the migration loader function to a type, which is now required

in V6.

- `github.com/santhosh-tekuri/jsonschema/v6` was already used by gof3,

so removing the v5 library and using the v6 library reduces the binary

size of Forgejo.

- Before: 95912040 bytes

- After: 95706152 bytes

Running git update-index for every individual file is slow, so add and

remove everything with a single git command.

When such a big commit lands in the default branch, it could cause PR

creation and patch checking for all open PRs to be slow, or time out

entirely. For example, a commit that removes 1383 files was measured to

take more than 60 seconds and timed out. With this change checking took

about a second.

This is related to #27967, though this will not help with commits that

change many lines in few files.

(cherry picked from commit b88e5fc72d99e9d4a0aa9c13f70e0a9e967fe057)

- Remove an unused dependency. It was added to handle YAML 'frontmatter' metadata: parsing it and converting it to a table or details in the resulting HTML, as can be read in the issue that reported YAML frontmatter being rendered literally, https://github.com/go-gitea/gitea/issues/5377.

- The dependency is unused because the codebase has since moved on to do this YAML parsing and rendering on its own; this was implemented in 812cfd0ad9.

- Add unit tests related to this functionality, to prove the codebase already handles this and to prevent regressions.

- Update the `github.com/buildkite/terminal-to-html/v3` dependency from

version v3.10.1 to v3.13.0.

- Version v3.12.0 introduced an incompatible change, the return type of

`AsHTML` changed from `[]byte` to `string`. That same version also

introduced streaming mode

https://github.com/buildkite/terminal-to-html/pull/126, which allows us

to avoid reading the whole input into memory.

- Closes #4313

A test must not fail depending on the performance of the machine it runs on. That creates false negatives and serves no purpose. These are not benchmark tests for the hardware running them.

If a repository has

    git config --add push.pushOption submit=".sourcehut/*.yml"

configured, pushing it failed because of the unknown `submit` push option. The option is now ignored instead.

Filtering out the push options is done in an earlier stage, when the

hook command runs, before it submits the options map to the private

endpoint.

* move all the push options logic to modules/git/pushoptions

* add 100% test coverage for modules/git/pushoptions
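
The filtering described above can be sketched roughly as follows. This is a minimal illustration and not the code in modules/git/pushoptions: the `knownOptions` allow-list and the `filterPushOptions` helper are hypothetical names, the listed keys are an assumption, and treating a bare key as `true` is an assumption as well.

```go
package main

import (
	"fmt"
	"strings"
)

// knownOptions is an illustrative allow-list (an assumption); the real set
// of supported keys lives in the Forgejo code base and may differ.
var knownOptions = map[string]bool{
	"repo.private":  true,
	"repo.template": true,
	"topic":         true,
	"title":         true,
	"description":   true,
	"force-push":    true,
}

// filterPushOptions parses raw "key=value" push options and keeps only the
// keys found in knownOptions. Unknown keys are dropped silently instead of
// causing the push to be rejected. A bare key without "=" is stored as
// "true" (an assumption made for this sketch).
func filterPushOptions(raw []string) map[string]string {
	kept := make(map[string]string)
	for _, opt := range raw {
		key, value, found := strings.Cut(opt, "=")
		if !found {
			value = "true"
		}
		if knownOptions[key] {
			kept[key] = value
		}
	}
	return kept
}

func main() {
	opts := filterPushOptions([]string{
		`submit=".sourcehut/*.yml"`, // unknown: ignored
		"topic=my-feature",          // known: kept
	})
	fmt.Println(opts)
}
```

Doing this before the options map is submitted means the later stages only ever see keys they understand.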

Test coverage for the code paths from which code was moved to the

modules/git/pushoptions package:

* cmd/hook.go:runHookPreReceive

* routers/private/hook_pre_receive.go:validatePushOptions

tests/integration/git_push_test.go:TestOptionsGitPush runs through

both. The test verifying the option is rejected was removed and, if

added again, will fail because the option is now ignored instead of

being rejected.

* cmd/hook.go:runHookProcReceive

* services/agit/agit.go:ProcReceive

tests/integration/git_test.go:doCreateAgitFlowPull runs through

both. It uses variations of AGit related push options.

* cmd/hook.go:runHookPostReceive

* routers/private/hook_post_receive.go:HookPostReceive

tests/integration/git_test.go:doPushCreate called by TestGit/HTTP/sha1/PushCreate

runs through both.

Note that although it provides coverage for this code path it does not use push options.

Fixes: https://codeberg.org/forgejo/forgejo/issues/3651

Support legacy _links LFS batch response.

Fixes #31512.

This is a backwards-compatible change to the LFS client so that, upon mirroring from an upstream which has a batch API, it can download objects whether the responses contain the `_links` field or its successor, the `actions` field. When Gitea must fall back to the legacy `_links` field, a log line is emitted at INFO level which looks like this:

```

...s/lfs/http_client.go:188:performOperation() [I] <LFSPointer ee95d0a27ccdfc7c12516d4f80dcf144a5eaf10d0461d282a7206390635cdbee:160> is using a deprecated batch schema response!

```
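
The fallback can be pictured with a small decoding sketch. It is an illustration only, not the code in the LFS client: the `objectResponse` and `link` types and the `downloadLink` helper are hypothetical, and the JSON shape is trimmed down to the fields needed here.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// link is one entry of "actions" or "_links": a URL plus optional headers.
type link struct {
	Href   string            `json:"href"`
	Header map[string]string `json:"header,omitempty"`
}

// objectResponse is a trimmed-down batch response object that accepts both
// the current "actions" field and the deprecated "_links" field.
type objectResponse struct {
	Oid     string          `json:"oid"`
	Size    int64           `json:"size"`
	Actions map[string]link `json:"actions,omitempty"`
	Links   map[string]link `json:"_links,omitempty"`
}

// downloadLink returns the download link, preferring "actions" and falling
// back to "_links". The boolean reports whether the fallback was used, so
// the caller can log a deprecation notice.
func downloadLink(raw []byte) (link, bool, error) {
	var obj objectResponse
	if err := json.Unmarshal(raw, &obj); err != nil {
		return link{}, false, err
	}
	if l, ok := obj.Actions["download"]; ok {
		return l, false, nil
	}
	if l, ok := obj.Links["download"]; ok {
		return l, true, nil
	}
	return link{}, false, fmt.Errorf("object %s has no download link", obj.Oid)
}

func main() {
	raw := []byte(`{"oid":"abc","size":160,"_links":{"download":{"href":"https://example.com/abc"}}}`)
	l, legacy, err := downloadLink(raw)
	fmt.Println(l.Href, legacy, err)
}
```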

I've only run `test-backend` with this code, but added a new test to

cover this case. Additionally I have a fork with this change deployed

which I've confirmed syncs LFS from Gitea<-Artifactory (which has legacy

`_links`) as well as from Gitea<-Gitea (which has the modern `actions`).

Signed-off-by: Royce Remer <royceremer@gmail.com>

(cherry picked from commit df805d6ed0458dbec258d115238fde794ed4d0ce)

Closes #2797

I'm aware that https://github.com/go-gitea/gitea/pull/28163 exists, but since I had this lying around on my drive collecting dust, I might as well open a PR for it, in case anyone wants the feature a bit sooner than waiting for upstream to release it, or wants it to be a forgejo "native" implementation.

This PR Contains:

- Support for the `workflow_dispatch` trigger

- Inputs: boolean, string, number, choice

Things still to be done:

- [x] API Endpoint `/api/v1/<org>/<repo>/actions/workflows/<workflow id>/dispatches`

- ~~Fixing some UI bugs I had no time figuring out, like why the menus of dropdown/choice inputs behave weirdly~~ Unrelated visual bug with dropdowns inside dropdowns

- [x] Fix bug where opening the branch selection submits the form

- [x] Limit on inputs to render/process

Things not in this PR:

- Inputs: environment (First need support for environments in forgejo)

Things needed to test this:

- A patch for https://code.forgejo.org/forgejo/runner to actually consider the inputs inside the workflow.

~~One possible patch can be seen here: https://code.forgejo.org/Mai-Lapyst/runner/src/branch/support-workflow-inputs~~

[PR](https://code.forgejo.org/forgejo/runner/pulls/199)

## Testing

- Checkout PR

- Setup new development runner with [this PR](https://code.forgejo.org/forgejo/runner/pulls/199)

- Create a repo with a workflow (see below)

- Go to the actions tab, select the workflow and see the notice as in the screenshot above

- Use the button + dropdown to run the workflow

- Try also running it via the api using the `` endpoint

- ...

- Profit!

<details>

<summary>Example workflow</summary>

```yaml
on:
  workflow_dispatch:
    inputs:
      logLevel:
        description: 'Log Level'
        required: true
        default: 'warning'
        type: choice
        options:
          - info
          - warning
          - debug
      tags:
        description: 'Test scenario tags'
        required: false
        type: boolean
      boolean_default_true:
        description: 'Test scenario tags'
        required: true
        type: boolean
        default: true
      boolean_default_false:
        description: 'Test scenario tags'
        required: false
        type: boolean
        default: false
      number1_default:
        description: 'Number w. default'
        default: '100'
        type: number
      number2:
        description: 'Number w/o. default'
        type: number
      string1_default:
        description: 'String w. default'
        default: 'Hello world'
        type: string
      string2:
        description: 'String w/o. default'
        required: true
        type: string

jobs:
  test:
    runs-on: docker
    steps:
      - uses: actions/checkout@v3
      - run: whoami
      - run: cat /etc/issue
      - run: uname -a
      - run: date
      - run: echo ${{ inputs.logLevel }}
      - run: echo ${{ inputs.tags }}
      - env:
          GITHUB_CONTEXT: ${{ toJson(github) }}
        run: echo "$GITHUB_CONTEXT"
      - run: echo "abc"
```

</details>

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/3334

Reviewed-by: Earl Warren <earl-warren@noreply.codeberg.org>

Co-authored-by: Mai-Lapyst <mai-lapyst@noreply.codeberg.org>

Co-committed-by: Mai-Lapyst <mai-lapyst@noreply.codeberg.org>

This PR modifies the structs for editing and creating org teams to allow

team names to be up to 255 characters. The previous maximum length was

30 characters.

(cherry picked from commit 1c26127b520858671ce257c7c9ab978ed1e95252)

Fix adopt repository has empty object name in database (#31333)

Fix #31330

Fix #31311

A workaround to fix the old database is to update object_format_name to

`sha1` if it's empty or null.

(cherry picked from commit 1968c2222dcf47ebd1697afb4e79a81e74702d31)

With tests services/repository/adopt_test.go

Add tag protection management via the REST API.

---------

Co-authored-by: Alexander Kogay <kogay.a@citilink.ru>

Co-authored-by: Giteabot <teabot@gitea.io>

(cherry picked from commit d4e4226c3cbfa62a6adf15f4466747468eb208c7)

Conflicts:

modules/structs/repo_tag.go

trivial context conflict

templates/swagger/v1_json.tmpl

fixed with make generate-swagger

Fix #31327

This is a quick patch to fix the bug.

Some parameters are using 0, some are using -1. I think it needs a refactor to be consistent, but that will be another PR.

(cherry picked from commit e4abaff7ffbbc5acd3aa668a9c458fbdf76f9573)

The PR replaces all `goldmark/util.BytesToReadOnlyString` with

`util.UnsafeBytesToString`, `goldmark/util.StringToReadOnlyBytes` with

`util.UnsafeStringToBytes`. This removes one `TODO`.
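
For readers unfamiliar with this kind of helper, zero-copy conversions are typically written as below. This is a sketch assuming Go 1.20 or later; the lower-case function names are stand-ins, and the bodies are an assumption about how such helpers are commonly implemented, not a copy of the `util` package.

```go
package main

import (
	"fmt"
	"unsafe"
)

// unsafeBytesToString returns a string that shares memory with b.
// The caller must not modify b afterwards, since strings are expected
// to be immutable.
func unsafeBytesToString(b []byte) string {
	return unsafe.String(unsafe.SliceData(b), len(b))
}

// unsafeStringToBytes returns a byte slice that shares memory with s.
// The caller must never write to the result.
func unsafeStringToBytes(s string) []byte {
	return unsafe.Slice(unsafe.StringData(s), len(s))
}

func main() {
	s := unsafeBytesToString([]byte("hello"))
	b := unsafeStringToBytes("world")
	fmt.Println(s, string(b))
}
```

Both conversions avoid the allocation and copy that `string(b)` and `[]byte(s)` perform, at the price of the read-only contract spelled out in the comments.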

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

(cherry picked from commit 1761459ebc7eb6d432eced093b4583425a5c5d4b)

Fix a hash render problem like `<hash>: xxxxx` which is usually used in

release notes.

(cherry picked from commit 7115dce773e3021b3538ae360c4e7344d5bbf45b)

Fix #31330

Fix #31311

A workaround to fix the old database is to update object_format_name to

`sha1` if it's empty or null.

(cherry picked from commit 1968c2222dcf47ebd1697afb4e79a81e74702d31)

Enable [unparam](https://github.com/mvdan/unparam) linter.

Often I could not tell why a parameter is unused, so I put `//nolint` on those cases, such as the webhook request creation functions that never use `ctx`.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: delvh <dev.lh@web.de>

(cherry picked from commit fc2d75f86d77b022ece848acf2581c14ef21d43b)

Conflicts:

modules/setting/config_env.go

modules/storage/azureblob.go

services/webhook/dingtalk.go

services/webhook/discord.go

services/webhook/feishu.go

services/webhook/matrix.go

services/webhook/msteams.go

services/webhook/packagist.go

services/webhook/slack.go

services/webhook/telegram.go

services/webhook/wechatwork.go

run make lint-go and fix Forgejo specific warnings

Use the proper HTTP time format rather than replacing the zone with GMT in time.RFC1123 =)
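
The difference can be illustrated as follows. `http.TimeFormat` is the layout the Go standard library defines for HTTP dates; the `legacyHTTPDate` helper is a hypothetical reconstruction of the kind of string patching being replaced, not the original code, and `sample` is an arbitrary instant chosen for the demonstration.

```go
package main

import (
	"fmt"
	"net/http"
	"strings"
	"time"
)

// sample is an arbitrary instant used for the demonstration.
var sample = time.Date(2024, time.July, 1, 12, 0, 0, 0, time.UTC)

// httpDate formats t with the layout the standard library defines for
// HTTP headers; that layout ends in a literal "GMT".
func httpDate(t time.Time) string {
	return t.UTC().Format(http.TimeFormat)
}

// legacyHTTPDate is a hypothetical reconstruction of the workaround:
// format with time.RFC1123, then patch the zone name afterwards.
func legacyHTTPDate(t time.Time) string {
	return strings.Replace(t.UTC().Format(time.RFC1123), "UTC", "GMT", 1)
}

func main() {
	fmt.Println(httpDate(sample))
	fmt.Println(legacyHTTPDate(sample) == httpDate(sample))
}
```

Both helpers produce the same string for a UTC time, but the first one does not depend on the zone abbreviation that happens to be printed.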

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/4132

Reviewed-by: Earl Warren <earl-warren@noreply.codeberg.org>

Co-authored-by: Shiny Nematoda <snematoda.751k2@aleeas.com>

Co-committed-by: Shiny Nematoda <snematoda.751k2@aleeas.com>

This solution implements a new config variable MAX_ROWS, the “Maximum allowed rows to render CSV files. (0 for no limit)”, and rewrites the Render function for CSV files in the markup module. The render function now reads the file only once, with MAX_FILE_SIZE+1 as the reader limit and MAX_ROWS as the row limit. When the file is larger than MAX_FILE_SIZE or has more rows than MAX_ROWS, it renders only up to the limit and displays a user-friendly warning, in the user's language, informing that the rendered data is not complete.
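
The single-pass approach can be sketched like this. It is an illustration under assumptions, not the Render function itself: `readLimitedCSV` and `fromString` are hypothetical helpers, and the sketch only collects rows and a `truncated` flag instead of producing HTML.

```go
package main

import (
	"encoding/csv"
	"errors"
	"fmt"
	"io"
	"strings"
)

// readLimitedCSV reads r once, stopping after maxSize bytes or maxRows rows
// (maxRows == 0 means no row limit). truncated reports whether input was
// left unrendered, so the caller can show a warning.
func readLimitedCSV(r io.Reader, maxSize int64, maxRows int) (rows [][]string, truncated bool, err error) {
	// Allow one byte more than the limit so an oversized file is detectable.
	limited := &io.LimitedReader{R: r, N: maxSize + 1}
	cr := csv.NewReader(limited)
	cr.FieldsPerRecord = -1 // the last row may be cut off by the size limit
	for {
		rec, err := cr.Read()
		if errors.Is(err, io.EOF) {
			break
		}
		if err != nil {
			return rows, truncated, err
		}
		if maxRows > 0 && len(rows) == maxRows {
			truncated = true // a further row exists beyond the row limit
			break
		}
		rows = append(rows, rec)
	}
	if limited.N <= 0 {
		truncated = true // more than maxSize bytes were available
	}
	return rows, truncated, nil
}

// fromString is a small convenience wrapper for the example.
func fromString(s string, maxSize int64, maxRows int) ([][]string, bool, error) {
	return readLimitedCSV(strings.NewReader(s), maxSize, maxRows)
}

func main() {
	rows, truncated, err := fromString("a,b\n1,2\n3,4\n", 1024, 2)
	fmt.Println(len(rows), truncated, err)
}
```

Reading one byte past the size limit is what lets a single pass distinguish a file that exactly fits from one that is too large.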

---

Previously, when a CSV file was larger than the limit, the render

function lost its function to render the code. There were also multiple

reads to the file, in order to determine its size and render or

pre-render.

The warning:

(cherry picked from commit f7125ab61aaa02fd4c7ab0062a2dc9a57726e2ec)

Add an option to override the headers of mails that Gitea sends out

---

*Sponsored by Kithara Software GmbH*

(cherry picked from commit aace3bccc3290446637cac30b121b94b5d03075f)

Conflicts:

docs/content/administration/config-cheat-sheet.en-us.md

does not exist in Forgejo

services/mailer/mailer_test.go

trivial context conflict

Resolves #31131.

It uses the go-swagger `enum` property to document the activity action types.

(cherry picked from commit cb27c438a82fec9f2476f6058bc5dcda2617aab5)

This is a PR for #3616

This adds a new optional config `SLOGAN` to the ini file. When this config is set, the page title becomes APP_NAME [ - SLOGAN]

Example in image below

Add the new config value in the admin settings page (readonly)

## TODO

* [x] Add the possibility to add the `SLOGAN` config from the installation form

* [ ] Update https://forgejo.org/docs/next/admin/config-cheat-sheet

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/3752

Reviewed-by: 0ko <0ko@noreply.codeberg.org>

Reviewed-by: Earl Warren <earl-warren@noreply.codeberg.org>

Co-authored-by: mirko <mirko.perillo@gmail.com>

Co-committed-by: mirko <mirko.perillo@gmail.com>

This PR adds some fields to the gitea webhook payload that [openproject](https://www.openproject.org/) expects to exist in order to process the webhooks.

These fields do exist in GitHub's webhook payload, so adding them makes Gitea's native webhook more compatible with GitHub's.

Renovate tried to update redis/go-redis, but failed because the interface changed: two new functions, `BitFieldRO` and `ObjectFreq`, were added.

Changes:

- Update redis/go-redis

- Run mockgen:

```

mockgen -package mock -destination ./modules/queue/mock/redisuniversalclient.go github.com/redis/go-redis/v9 UniversalClient

```

References:

- https://codeberg.org/forgejo/forgejo/pulls/4009

This updates the mapping definition of the elasticsearch issue indexer backend to use `long` instead of `integer` wherever the Go type is an `int64`. Without it, larger instances could run into an issue.

Reviewed-on: https://codeberg.org/forgejo/forgejo/pulls/3982

Reviewed-by: Earl Warren <earl-warren@noreply.codeberg.org>

Co-authored-by: Mai-Lapyst <mai-lapyst@noreply.codeberg.org>

Co-committed-by: Mai-Lapyst <mai-lapyst@noreply.codeberg.org>

It is fine to use MockVariableValue to change a setting such as:

    defer test.MockVariableValue(&setting.Mirror.Enabled, true)()

But when testing for errors and mocking a function, multiple variants of the function will be used, not just one. Using MockProtect on a function makes sure that when the test fails, a sane version of the function is always restored. For instance:

    defer test.MockProtect(&mirror_service.AddPushMirrorRemote)()

    mirror_service.AddPushMirrorRemote = mockOne

    do some tests that may fail

    mirror_service.AddPushMirrorRemote = mockTwo

    do more tests that may fail
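
A minimal sketch of how such helpers can be built with generics is shown below. The lower-case names and the bodies are assumptions made for illustration and are not taken from the `test` package; `greet` is a stand-in for a mockable function variable.

```go
package main

import "fmt"

// mockVariableValue sets *p to v and returns a function that restores the
// previous value.
func mockVariableValue[T any](p *T, v T) (reset func()) {
	old := *p
	*p = v
	return func() { *p = old }
}

// mockProtect only remembers the current value of *p and returns a function
// that restores it, no matter how often *p was reassigned in between.
func mockProtect[T any](p *T) (reset func()) {
	old := *p
	return func() { *p = old }
}

// greet stands in for a package-level function variable that tests replace.
var greet = func() string { return "real" }

func main() {
	func() {
		defer mockProtect(&greet)()
		greet = func() string { return "mockOne" }
		fmt.Println(greet())
		greet = func() string { return "mockTwo" }
		fmt.Println(greet())
	}()
	// The deferred restore has run, so the original is back.
	fmt.Println(greet())
}
```

Because the restore is deferred before any mock is assigned, it also runs when a test aborts halfway through.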