## Changes

- Adds the following high-level access scopes, each with `read` and `write` levels:

- `activitypub`

- `admin` (hidden if user is not a site admin)

- `misc`

- `notification`

- `organization`

- `package`

- `issue`

- `repository`

- `user`

- Adds new middleware function `tokenRequiresScopes()` in addition to

`reqToken()`

- `tokenRequiresScopes()` is used for each high-level api section

- _if_ a scoped token is present, checks that the required scope is

included based on the section and HTTP method

- `reqToken()` is used for individual routes

- checks that required authentication is present (but does not check scope levels, as this will already have been handled by `tokenRequiresScopes()`)

- Adds migration to convert old scoped access tokens to the new set of

scopes

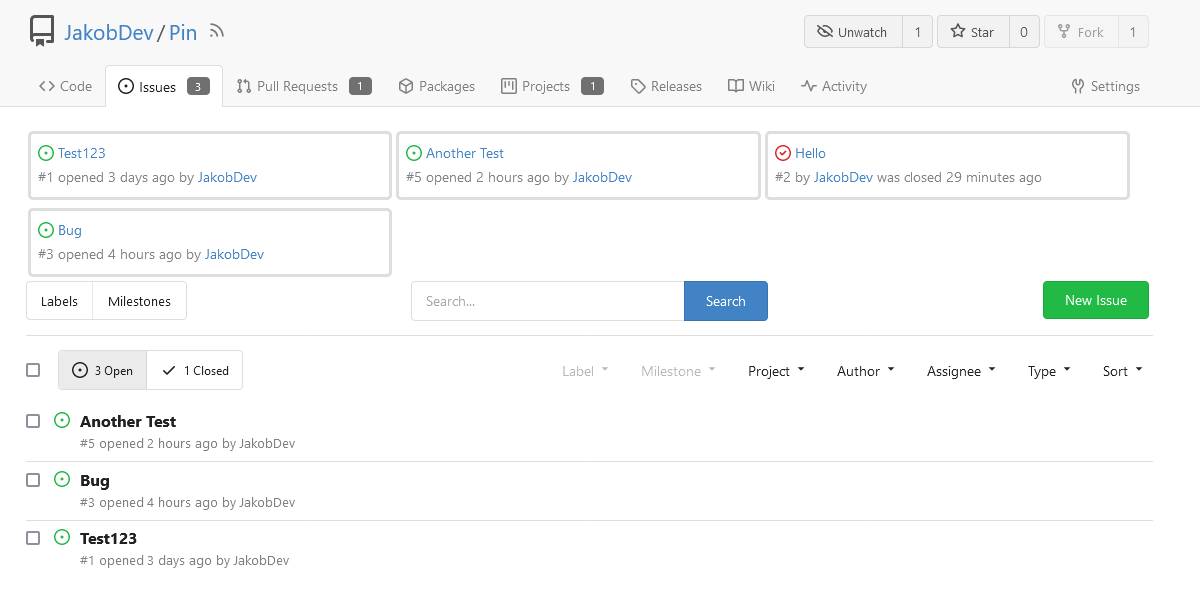

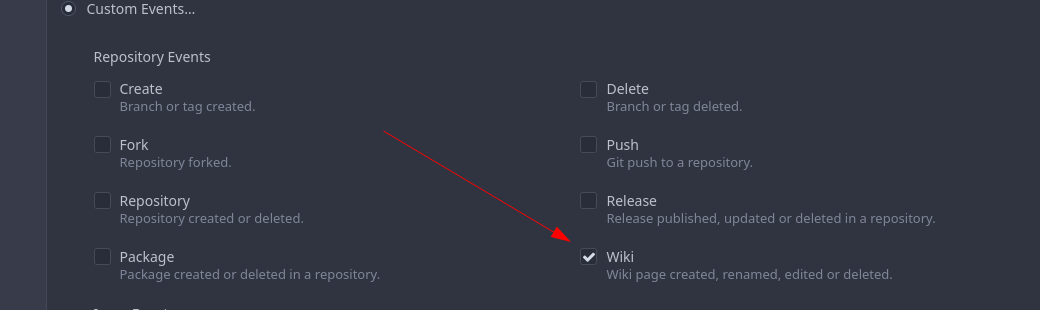

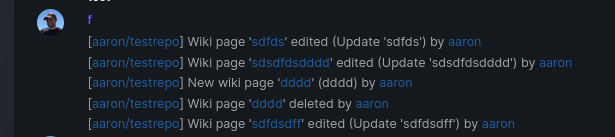

- Updates the user interface for scope selection

### User interface example

<img width="903" alt="Screen Shot 2023-05-31 at 1 56 55 PM"

src="https://github.com/go-gitea/gitea/assets/23248839/654766ec-2143-4f59-9037-3b51600e32f3">

<img width="917" alt="Screen Shot 2023-05-31 at 1 56 43 PM"

src="https://github.com/go-gitea/gitea/assets/23248839/1ad64081-012c-4a73-b393-66b30352654c">

## tokenRequiresScopes Design Decision

- `tokenRequiresScopes()` was added to more reliably cover api routes.

For an incoming request, this function uses the given scope category

(say `AccessTokenScopeCategoryOrganization`) and the HTTP method (say

`DELETE`) and verifies that any scoped tokens in use include

`delete:organization`.

- `reqToken()` is used to enforce auth for individual routes that

require it. If a scoped token is not present for a request,

`tokenRequiresScopes()` will not return an error
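The split described above can be sketched as follows. This is a minimal illustration, not the code from this PR: `requiredScope`, `hasScope`, `tokenRequiresScope` and the `X-Token-Scopes` header are hypothetical names, and the method-to-level mapping is an assumption derived from the `delete:organization` example.

```go
package main

import (
	"fmt"
	"net/http"
	"strings"
)

// requiredScope maps a scope category and an HTTP method to the scope string
// a scoped token would need. The level names are an assumption for this sketch.
func requiredScope(category, method string) string {
	level := "write"
	switch method {
	case http.MethodGet, http.MethodHead, http.MethodOptions:
		level = "read"
	case http.MethodDelete:
		level = "delete"
	}
	return level + ":" + category
}

// hasScope reports whether a comma-separated scope list contains want.
func hasScope(tokenScopes, want string) bool {
	for _, s := range strings.Split(tokenScopes, ",") {
		if strings.TrimSpace(s) == want {
			return true
		}
	}
	return false
}

// tokenRequiresScope is a hypothetical stand-in for the section-level
// middleware: it rejects a request only when a scoped token is present and
// lacks the required scope. It does not check that any auth is present.
func tokenRequiresScope(category string, next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		scopes := r.Header.Get("X-Token-Scopes") // hypothetical carrier for the token's scopes
		if scopes != "" && !hasScope(scopes, requiredScope(category, r.Method)) {
			http.Error(w, "token lacks required scope", http.StatusForbidden)
			return
		}
		next.ServeHTTP(w, r)
	})
}

func main() {
	fmt.Println(requiredScope("organization", http.MethodDelete))
}
```

Because the sketch lets requests without a scoped token pass through, a separate per-route check (the role `reqToken()` plays here) is still needed to require authentication at all.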

## TODO

- [x] Alphabetize scope categories

- [x] Change 'public repos only' to a radio button (private vs public).

Also expand this to organizations

- [X] Disable token creation if no scopes selected. Alternatively, show

warning

- [x] `reqToken()` is missing from many `POST/DELETE` routes in the api.

`tokenRequiresScopes()` only checks that a given token has the correct

scope, `reqToken()` must be used to check that a token (or some other

auth) is present.

- _This should be addressed in this PR_

- [x] The migration should be reviewed very carefully in order to

minimize access changes to existing user tokens.

- _This should be addressed in this PR_

- [x] Link to the API swagger documentation, and clarify what the read/write/delete levels correspond to

- [x] Review cases where more than one scope is needed as this directly

deviates from the api definition.

- _This should be addressed in this PR_

- For example:

```go

m.Group("/users/{username}/orgs", func() {

m.Get("", reqToken(), org.ListUserOrgs)

m.Get("/{org}/permissions", reqToken(), org.GetUserOrgsPermissions)

}, tokenRequiresScopes(auth_model.AccessTokenScopeCategoryUser,

auth_model.AccessTokenScopeCategoryOrganization),

context_service.UserAssignmentAPI())

```

## Future improvements

- [ ] Add required scopes to swagger documentation

- [ ] Redesign `reqToken()` to be opt-out rather than opt-in

- [ ] Subdivide scopes like `repository`

- [ ] Once a token is created, if it has no scopes, we should display

text instead of an empty bullet point

- [ ] If the 'public repos only' option is selected, should read

categories be selected by default

Closes #24501

Closes #24799

Co-authored-by: Jonathan Tran <jon@allspice.io>

Co-authored-by: Kyle D <kdumontnu@gmail.com>

Co-authored-by: silverwind <me@silverwind.io>

Previously, Gitea showed the database table stats on the `admin dashboard` page.

It has some problems:

* `count(*)` is quite heavy. If tables have many records, this blocks loading the admin page for a long time

* Some users have even reported that they can't visit their admin page because it blocks or returns a `50x error (reverse proxy timeout)`

* The `actions` stat is not useful. The table is simply too large. Does

it really matter if it contains 1,000,000 rows or 9,999,999 rows?

* The translation `admin.dashboard.statistic_info` is difficult to

maintain.

So, this PR uses a separate page to show the stats and removes the

`actions` stat.

## ⚠️ BREAKING

The `actions` Prometheus metrics collector has been removed for the

reasons mentioned beforehand.

Please do not rely on its output anymore.

This addresses some things from #24406 that came up after the PR was

merged. Mostly from @delvh.

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: delvh <dev.lh@web.de>

This PR replaces every string-typed refName with the type `git.RefName` to make the code more maintainable.

Fix #15367

Replaces #23070
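To illustrate why a dedicated type helps, here is a minimal sketch of a typed reference name. It is not the actual `git.RefName` implementation: the methods `IsBranch`, `IsTag` and `ShortName` are assumptions chosen for this example.

```go
package main

import (
	"fmt"
	"strings"
)

// RefName is a hypothetical typed wrapper around a full git reference name.
// The compiler rejects a plain string where a RefName is expected.
type RefName string

const (
	branchPrefix = "refs/heads/"
	tagPrefix    = "refs/tags/"
)

// IsBranch reports whether the reference points at a branch.
func (r RefName) IsBranch() bool { return strings.HasPrefix(string(r), branchPrefix) }

// IsTag reports whether the reference points at a tag.
func (r RefName) IsTag() bool { return strings.HasPrefix(string(r), tagPrefix) }

// ShortName strips the well-known prefix, if any.
func (r RefName) ShortName() string {
	s := string(r)
	switch {
	case r.IsBranch():
		return strings.TrimPrefix(s, branchPrefix)
	case r.IsTag():
		return strings.TrimPrefix(s, tagPrefix)
	}
	return s
}

func main() {
	ref := RefName("refs/heads/main")
	fmt.Println(ref.IsBranch(), ref.ShortName())
}
```

With prefix handling kept in one place, callers no longer need to repeat string comparisons against `refs/heads/` or `refs/tags/`.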

It also fixes a bug where tags were not synced, because `git remote --prune origin` does not remove local tags that were removed on the remote.

We should in fact use `git fetch --prune --tags origin` rather than `git remote update origin` to do the sync.

Some answer from ChatGPT as ref.

> If the git fetch --prune --tags command is not working as expected,

there could be a few reasons why. Here are a few things to check:

>

>Make sure that you have the latest version of Git installed on your

system. You can check the version by running git --version in your

terminal. If you have an outdated version, try updating Git and see if

that resolves the issue.

>

>Check that your Git repository is properly configured to track the

remote repository's tags. You can check this by running git config

--get-all remote.origin.fetch and verifying that it includes

+refs/tags/*:refs/tags/*. If it does not, you can add it by running git

config --add remote.origin.fetch "+refs/tags/*:refs/tags/*".

>

>Verify that the tags you are trying to prune actually exist on the

remote repository. You can do this by running git ls-remote --tags

origin to list all the tags on the remote repository.

>

>Check if any local tags have been created that match the names of tags

on the remote repository. If so, these local tags may be preventing the

git fetch --prune --tags command from working properly. You can delete

local tags using the git tag -d command.

---------

Co-authored-by: delvh <dev.lh@web.de>

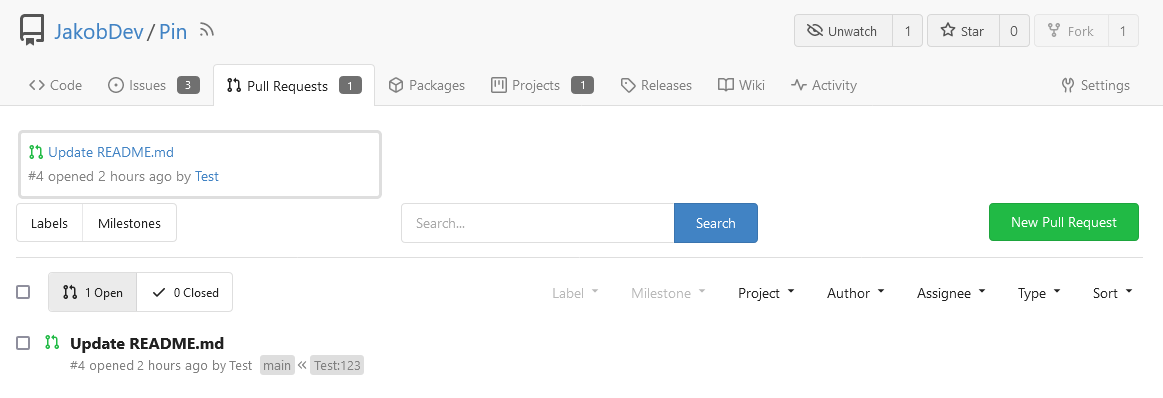

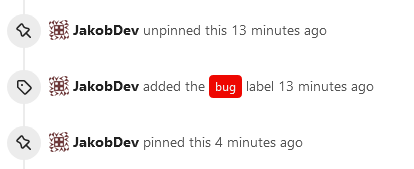

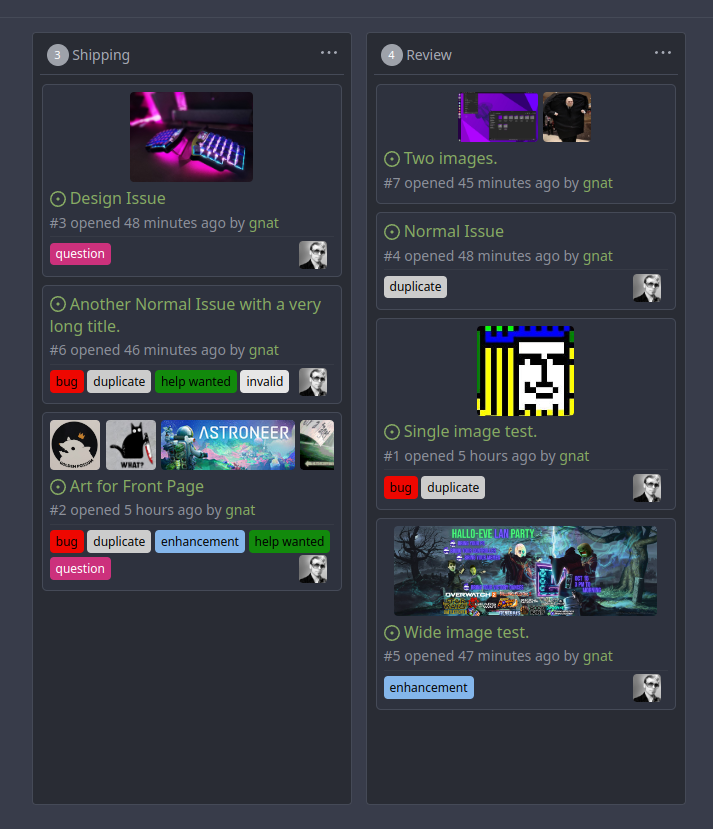

This adds the ability to pin important Issues and Pull Requests. You can

also move pinned Issues around to change their Position. Resolves #2175.

## Screenshots

The Design was mostly copied from the Projects Board.

## Implementation

This uses a new `pin_order` Column in the `issue` table. If the value is

set to 0, the Issue is not pinned. If it's set to a bigger value, the

value is the Position. 1 means it's the first pinned Issue, 2 means it's

the second one, etc. This is divided into Issues and Pull Requests for each

Repo.
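The `pin_order` semantics can be sketched in memory as follows. This is only an illustration under assumptions: the `Issue` struct and the `pin`/`unpin` helpers are hypothetical, and the real change operates on database rows rather than a slice.

```go
package main

import "fmt"

// Issue is a hypothetical, trimmed-down model.
// PinOrder == 0 means "not pinned"; n > 0 is the 1-based position.
type Issue struct {
	ID       int64
	PinOrder int
}

// pin gives an unpinned issue the next free position.
func pin(issues []*Issue, id int64) {
	highest := 0
	var target *Issue
	for _, is := range issues {
		if is.PinOrder > highest {
			highest = is.PinOrder
		}
		if is.ID == id {
			target = is
		}
	}
	if target != nil && target.PinOrder == 0 {
		target.PinOrder = highest + 1
	}
}

// unpin clears the position and shifts later pins up to close the gap.
func unpin(issues []*Issue, id int64) {
	old := 0
	for _, is := range issues {
		if is.ID == id {
			old = is.PinOrder
			is.PinOrder = 0
		}
	}
	if old == 0 {
		return // was not pinned
	}
	for _, is := range issues {
		if is.PinOrder > old {
			is.PinOrder--
		}
	}
}

func main() {
	issues := []*Issue{{ID: 1}, {ID: 2}, {ID: 3}}
	pin(issues, 1)
	pin(issues, 2)
	pin(issues, 3)
	unpin(issues, 2)
	fmt.Println(issues[0].PinOrder, issues[1].PinOrder, issues[2].PinOrder)
}
```

Closing the gap on unpin keeps positions contiguous, so the value can be used directly as the display order.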

## TODO

- [x] You can currently pin as many Issues as you want. Maybe we should

add a Limit, which is configurable. GitHub uses 3, but I prefer 6, as

this is better for bigger Projects, but I'm open for suggestions.

- [x] Pin and Unpin events need to be added to the Issue history.

- [x] Tests

- [x] Migration

**The feature itself is currently fully working, so testers who may find weird edge cases are very welcome!**

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

close https://github.com/go-gitea/gitea/issues/16321

Provided a webhook trigger for requesting someone to review the Pull

Request.

Some modifications have been made to the returned `PullRequestPayload`

based on the GitHub webhook settings, including:

- adding a description of the current reviewer object as `RequestedReviewer`.

- setting the action to either **review_requested** or **review_request_removed** based on the operation.

- adding the `RequestedReviewers` field to `issues_model.PullRequest`. This field is loaded into the PullRequest through `LoadRequestedReviewers()` when `ToAPIPullRequest` is called.

After the Pull Request is merged, I will supplement the relevant

documentation.

## ⚠️ Breaking

The `log.<mode>.<logger>` style config has been dropped. If you used it,

please check the new config manual & app.example.ini to make your

instance output logs as expected.

Although many legacy options still work, it's encouraged to upgrade to

the new options.

The SMTP logger is deleted because SMTP is not suitable to collect logs.

If you have manually configured Gitea log options, please confirm the

logger system works as expected after upgrading.

## Description

Close #12082 and maybe more log-related issues, and resolve some related FIXMEs in old code (which seemed unfixable before).

Just like rewriting queue #24505 : make code maintainable, clear legacy

bugs, and add the ability to support more writers (eg: JSON, structured

log)

There is a new document (with examples): `logging-config.en-us.md`

This PR is safer than the queue rewriting, because it's just for

logging, it won't break other logic.

## The old problems

The logging system is quite old and difficult to maintain:

* Unclear concepts: Logger, NamedLogger, MultiChannelledLogger,

SubLogger, EventLogger, WriterLogger etc

* For some code it is difficult to know whether it is right:

`log.DelNamedLogger("console")` vs `log.DelNamedLogger(log.DEFAULT)` vs

`log.DelLogger("console")`

* The old system heavily depends on ini config system, it's difficult to

create new logger for different purpose, and it's very fragile.

* The "color" trick is difficult to use and read, many colors are

unnecessary, and in the future structured log could help

* It's difficult to add other log formats, eg: JSON format

* The log outputer doesn't have full control of its goroutine, it's

difficult to make outputer have advanced behaviors

* The logs could be lost in some cases: eg: no Fatal error when using

CLI.

* Config options are passed by JSON, which is quite fragile.

* INI package makes the KEY in `[log]` section visible in `[log.sub1]`

and `[log.sub1.subA]`, this behavior is quite fragile and would cause

more unclear problems, and there is no strong requirement to support

`log.<mode>.<logger>` syntax.

## The new design

See `logger.go` for documents.

## Screenshot

<details>

</details>

## TODO

* [x] add some new tests

* [x] fix some tests

* [x] test some sub-commands (manually ....)

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Giteabot <teabot@gitea.io>

This PR started as a refactor and now does 4 things:

- [x] Move the organization and user renaming logic into the services layer and merge it into one function

- [x] Support renaming a user when only the capitalization changes. For example, renaming the user from `Lunny` to `lunny`. We just need to change one table, `user`, and the others should not be touched.

- [x] Before this PR, some renames were missed, such as `agit`

- [x] Fix bug: the status returned by the API changes from `http.StatusNoContent` to `http.StatusOK`

The topics are saved in the `repo_topic` table.

They are also saved directly in the `repository` table.

Before this PR, only `AddTopic` and `SaveTopics` made sure the `topics`

field in the `repository` table was synced with the `repo_topic` table.

This PR makes sure `GenerateTopics` and `DeleteTopic`

also sync the `topics` in the repository table.

`RemoveTopicsFromRepo` doesn't need to sync the data

as it is only used to delete a repository.

Fixes #24820

This PR

- [x] Move some functions from `issues.go` to `issue_stats.go` and

`issue_label.go`

- [x] Remove duplicated issue options `UserIssueStatsOption` to keep

only one `IssuesOptions`

This PR

- [x] Move some code from `issue.go` to `issue_search.go` and

`issue_update.go`

- [x] Use `IssuesOptions` instead of `IssueStatsOptions` because they are too similar.

- [x] Rename some functions

fix #12192 Support SSH for go get

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: Giteabot <teabot@gitea.io>

Co-authored-by: mfk <mfk@hengwei.com.cn>

Co-authored-by: silverwind <me@silverwind.io>

Fix #21324

In the current logic, if the `Actor` user is not an admin user, all

activities from private organizations won't be shown even if the `Actor`

user is a member of the organization.

As mentioned in the issue, when using deploy key to make a commit and

push, the activity's `act_user_id` will be the id of the organization so

the activity won't be shown to non-admin users because the visibility of

the organization is private.

55a5717760/models/activities/action.go (L490-L503)

This PR improves this logic so the activities of private organizations

can be shown.

Fixes #24145

To solve the bug, I added a "computed" `TargetBehind` field to the

`Release` model, which indicates the target branch of a release.

This is particularly useful if the target branch was deleted in the

meantime (or is empty).

I also did a micro-optimization in `calReleaseNumCommitsBehind`. Instead of checking that a branch exists and then calling `GetBranchCommit`, I immediately call `GetBranchCommit` and handle the `git.ErrNotExist` error.

This optimization is covered by the added unit test.

# ⚠️ Breaking

Many deprecated queue config options are removed (actually, they should

have been removed in 1.18/1.19).

If you see the fatal message when starting Gitea: "Please update your

app.ini to remove deprecated config options", please follow the error

messages to remove these options from your app.ini.

Example:

```

2023/05/06 19:39:22 [E] Removed queue option: `[indexer].ISSUE_INDEXER_QUEUE_TYPE`. Use new options in `[queue.issue_indexer]`

2023/05/06 19:39:22 [E] Removed queue option: `[indexer].UPDATE_BUFFER_LEN`. Use new options in `[queue.issue_indexer]`

2023/05/06 19:39:22 [F] Please update your app.ini to remove deprecated config options

```

Many options in `[queue]` are dropped, including:

`WRAP_IF_NECESSARY`, `MAX_ATTEMPTS`, `TIMEOUT`, `WORKERS`,

`BLOCK_TIMEOUT`, `BOOST_TIMEOUT`, `BOOST_WORKERS`, they can be removed

from app.ini.

# The problem

The old queue package has some legacy problems:

* complexity: I doubt many people could tell how it works.

* maintainability: Too many channels and mutex/cond are mixed together, and too many different structs/interfaces depend on each other.

* stability: due to the complexity & maintainability, sometimes there are strange bugs that are difficult to debug, and some code doesn't have tests (indeed some code is difficult to test because a lot of things are mixed together).

* general applicability: although it is called "queue", its behavior is

not a well-known queue.

* scalability: it doesn't seem easy to make it work with a cluster

without breaking its behaviors.

It came from some very old code to "avoid breaking", however, its

technical debt is too heavy now. It's a good time to introduce a better

"queue" package.

# The new queue package

It keeps using old config and concept as much as possible.

* It only contains two major kinds of concepts:

* The "base queue": channel, levelqueue, redis

* They have the same abstraction, the same interface, and they are

tested by the same testing code.

* The "WorkerPoolQueue", it uses the "base queue" to provide "worker

pool" function, calls the "handler" to process the data in the base

queue.

* The new code doesn't do "PushBack"

* Think about a queue with many workers, the "PushBack" can't guarantee

the order for re-queued unhandled items, so in new code it just does

"normal push"

* The new code doesn't do "pause/resume"

* The "pause/resume" was designed to handle some handler's failure: eg:

document indexer (elasticsearch) is down

* If a queue is paused for a long time, either the producers block or the new items are dropped.

* The new code doesn't do such "pause/resume" trick, it's not a common

queue's behavior and it doesn't help much.

* If there are unhandled items, the "push" function just blocks for a

few seconds and then re-queue them and retry.

* The new code doesn't do "worker booster"

* Gitea's queue's handlers are light functions, the cost is only the

go-routine, so it doesn't make sense to "boost" them.

* The new code only uses "max worker number" to limit the concurrent

workers.

* The new "Push" never blocks forever

* Instead of creating more and more blocking goroutines, returning an error is more friendly to the server and to the end user.

There are more details in code comments: eg: the "Flush" problem, the

strange "code.index" hanging problem, the "immediate" queue problem.

Almost ready for review.

TODO:

* [x] add some necessary comments during review

* [x] add some more tests if necessary

* [x] update documents and config options

* [x] test max worker / active worker

* [x] re-run the CI tasks to see whether any test is flaky

* [x] improve the `handleOldLengthConfiguration` to provide more

friendly messages

* [x] fine tune default config values (eg: length?)


The "modules/context.go" is too large to maintain.

This PR splits it to separate files, eg: context_request.go,

context_response.go, context_serve.go

This PR will help:

1. The future refactoring for Gitea's web context (eg: simplify the context)

2. Introduce proper "range request" support

3. Introduce context function

This PR only moves code, doesn't change any logic.

Close #24213

Replace #23830

#### Cause

- Previously, so that a PR could pick up the latest commit after being reopened, the `ref` (${REPO_PATH}/refs/pull/${PR_INDEX}/head) of every closed PR was updated when commits were pushed to the `head branch` of the closed PR.

#### Changes

- For a closed PR, the following no longer happen when pushing to its `head branch`: inserting a `comment`, pushing a `notification` (UI and email), executing the [pushToBaseRepo](7422503341/services/pull/pull.go (L409)) function, and triggering an `action`.

- Refresh the reference of the PR when reopening the closed PR (**even

if the head branch has been deleted before**). Make the reference of PR

consistent with the `head branch`.

I don't remember why it was previously decided that `Code` and `Release` cannot be disabled globally. Since every unit, including `Code`, can now be disabled, maybe we should have a new rule that a repo must have at least one unit, so that any unit can be disabled.

Fixes #20960

Fixes #7525

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: yp05327 <576951401@qq.com>

Co-authored-by: @awkwardbunny

This PR adds a Debian package registry. You can follow [this

tutorial](https://www.baeldung.com/linux/create-debian-package) to build

a *.deb package for testing. Source packages are not supported at the

moment and I did not find documentation of the architecture "all" and

how these packages should be treated.

---------

Co-authored-by: Brian Hong <brian@hongs.me>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

There was only one `IsRepositoryExist` function, it did: `has && isDir`

However it's not right, and it would cause 500 error when creating a new

repository if the dir exists.

Then, it was changed to `has || isDir`, it is still incorrect, it

affects the "adopt repo" logic.

To make the logic clear:

* IsRepositoryModelOrDirExist

* IsRepositoryModelExist

Enable [forbidigo](https://github.com/ashanbrown/forbidigo) linter which

forbids print statements. Will check how to integrate this with the

smallest impact possible, so a few `nolint` comments will likely be

required. Plan is to just go through the issues and either:

- Remove the print if it is nonsensical

- Add a `//nolint` directive if it makes sense

I don't plan on investigating the individual issues any further.
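As a small illustration of the second option, the sketch below keeps an intentional print and silences the linter for that line only, using golangci-lint's `//nolint:<linter>` form. The `formatWarning` helper is hypothetical and the messages are examples, not code from this PR.

```go
package main

import (
	"fmt"
	"os"
)

// formatWarning is a hypothetical helper that builds a diagnostic message.
func formatWarning(msg string) string {
	return "warning: " + msg
}

func main() {
	// Intentional CLI output: keep the print and exempt just this line.
	fmt.Println("Converted successfully") //nolint:forbidigo

	// fmt.Fprintln is not matched by the forbidden pattern, so diagnostics
	// written to stderr need no directive.
	fmt.Fprintln(os.Stderr, formatWarning("content is empty"))
}
```

Scoping the directive to `forbidigo` keeps other linters active on the same line.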

<details>

<summary>Initial Lint Results</summary>

```

modules/log/event.go:348:6: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(err)

^

modules/log/event.go:382:6: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(err)

^

modules/queue/unique_queue_disk_channel_test.go:20:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("TempDir %s\n", tmpDir)

^

contrib/backport/backport.go:168:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* Backporting %s to %s as %s\n", pr, localReleaseBranch, backportBranch)

^

contrib/backport/backport.go:216:4: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* Navigate to %s to open PR\n", url)

^

contrib/backport/backport.go:223:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* `xdg-open %s`\n", url)

^

contrib/backport/backport.go:233:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* `git push -u %s %s`\n", remote, backportBranch)

^

contrib/backport/backport.go:243:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* Amending commit to prepend `Backport #%s` to body\n", pr)

^

contrib/backport/backport.go:272:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println("* Attempting git cherry-pick --continue")

^

contrib/backport/backport.go:281:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* Attempting git cherry-pick %s\n", sha)

^

contrib/backport/backport.go:297:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* Current branch is %s\n", currentBranch)

^

contrib/backport/backport.go:299:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* Current branch is %s - not checking out\n", currentBranch)

^

contrib/backport/backport.go:304:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* Branch %s already exists. Checking it out...\n", backportBranch)

^

contrib/backport/backport.go:308:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* `git checkout -b %s %s`\n", backportBranch, releaseBranch)

^

contrib/backport/backport.go:313:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* `git fetch %s main`\n", remote)

^

contrib/backport/backport.go:316:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(string(out))

^

contrib/backport/backport.go:319:2: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(string(out))

^

contrib/backport/backport.go:321:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("* `git fetch %s %s`\n", remote, releaseBranch)

^

contrib/backport/backport.go:324:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(string(out))

^

contrib/backport/backport.go:327:2: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(string(out))

^

models/unittest/fixtures.go:50:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println("Unsupported RDBMS for integration tests")

^

models/unittest/fixtures.go:89:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("LoadFixtures failed after retries: %v\n", err)

^

models/unittest/fixtures.go:110:4: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Failed to generate sequence update: %v\n", err)

^

models/unittest/fixtures.go:117:6: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Failed to update sequence: %s Error: %v\n", value, err)

^

models/migrations/base/tests.go:118:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println("Environment variable $GITEA_ROOT not set")

^

models/migrations/base/tests.go:127:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Could not find gitea binary at %s\n", setting.AppPath)

^

models/migrations/base/tests.go:134:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Environment variable $GITEA_CONF not set - defaulting to %s\n", giteaConf)

^

models/migrations/base/tests.go:145:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Unable to create temporary data path %v\n", err)

^

models/migrations/base/tests.go:154:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Unable to InitFull: %v\n", err)

^

models/migrations/v1_11/v112.go:34:5: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Error: %v", err)

^

contrib/fixtures/fixture_generation.go:36:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("CreateTestEngine: %+v", err)

^

contrib/fixtures/fixture_generation.go:40:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("PrepareTestDatabase: %+v\n", err)

^

contrib/fixtures/fixture_generation.go:46:5: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("generate '%s': %+v\n", r, err)

^

contrib/fixtures/fixture_generation.go:53:5: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("generate '%s': %+v\n", g.name, err)

^

contrib/fixtures/fixture_generation.go:71:4: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%s created.\n", path)

^

services/gitdiff/gitdiff_test.go:543:2: use of `println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

println(result)

^

services/gitdiff/gitdiff_test.go:560:2: use of `println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

println(result)

^

services/gitdiff/gitdiff_test.go:577:2: use of `println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

println(result)

^

modules/web/routing/logger_manager.go:34:2: use of `print` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

print Printer

^

modules/doctor/paths.go:109:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Warning: can't remove temporary file: '%s'\n", tmpFile.Name())

^

tests/test_utils.go:33:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf(format+"\n", args...)

^

tests/test_utils.go:61:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Environment variable $GITEA_CONF not set, use default: %s\n", giteaConf)

^

cmd/actions.go:54:9: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

_, _ = fmt.Printf("%s\n", respText)

^

cmd/admin_user_change_password.go:74:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%s's password has been successfully updated!\n", user.Name)

^

cmd/admin_user_create.go:109:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("generated random password is '%s'\n", password)

^

cmd/admin_user_create.go:164:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Access token was successfully created... %s\n", t.Token)

^

cmd/admin_user_create.go:167:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("New user '%s' has been successfully created!\n", username)

^

cmd/admin_user_generate_access_token.go:74:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%s\n", t.Token)

^

cmd/admin_user_generate_access_token.go:76:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Access token was successfully created: %s\n", t.Token)

^

cmd/admin_user_must_change_password.go:56:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Updated %d users setting MustChangePassword to %t\n", n, mustChangePassword)

^

cmd/convert.go:44:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println("Converted successfully, please confirm your database's character set is now utf8mb4")

^

cmd/convert.go:50:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println("Converted successfully, please confirm your database's all columns character is NVARCHAR now")

^

cmd/convert.go:52:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println("This command can only be used with a MySQL or MSSQL database")

^

cmd/doctor.go:104:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(err)

^

cmd/doctor.go:105:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println("Check if you are using the right config file. You can use a --config directive to specify one.")

^

cmd/doctor.go:243:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(err)

^

cmd/embedded.go:154:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(a.path)

^

cmd/embedded.go:198:3: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println("Using app.ini at", setting.CustomConf)

^

cmd/embedded.go:217:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Extracting to %s:\n", destdir)

^

cmd/embedded.go:253:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%s already exists; skipped.\n", dest)

^

cmd/embedded.go:275:2: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(dest)

^

cmd/generate.go:63:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%s", internalToken)

^

cmd/generate.go:66:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("\n")

^

cmd/generate.go:78:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%s", JWTSecretBase64)

^

cmd/generate.go:81:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("\n")

^

cmd/generate.go:93:2: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%s", secretKey)

^

cmd/generate.go:96:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("\n")

^

cmd/keys.go:74:2: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println(strings.TrimSpace(authorizedString))

^

cmd/mailer.go:32:4: use of `fmt.Print` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Print("warning: Content is empty")

^

cmd/mailer.go:35:3: use of `fmt.Print` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Print("Proceed with sending email? [Y/n] ")

^

cmd/mailer.go:40:4: use of `fmt.Println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Println("The mail was not sent")

^

cmd/mailer.go:49:9: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

_, _ = fmt.Printf("Sent %s email(s) to all users\n", respText)

^

cmd/serv.go:147:3: use of `println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

println("Gitea: SSH has been disabled")

^

cmd/serv.go:153:4: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("error showing subcommand help: %v\n", err)

^

cmd/serv.go:175:4: use of `println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

println("Hi there! You've successfully authenticated with the deploy key named " + key.Name + ", but Gitea does not provide shell access.")

^

cmd/serv.go:177:4: use of `println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

println("Hi there! You've successfully authenticated with the principal " + key.Content + ", but Gitea does not provide shell access.")

^

cmd/serv.go:179:4: use of `println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

println("Hi there, " + user.Name + "! You've successfully authenticated with the key named " + key.Name + ", but Gitea does not provide shell access.")

^

cmd/serv.go:181:3: use of `println` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

println("If this is unexpected, please log in with password and setup Gitea under another user.")

^

cmd/serv.go:196:5: use of `fmt.Print` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Print(`{"type":"gitea","version":1}`)

^

tests/e2e/e2e_test.go:54:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Error initializing test database: %v\n", err)

^

tests/e2e/e2e_test.go:63:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("util.RemoveAll: %v\n", err)

^

tests/e2e/e2e_test.go:67:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Unable to remove repo indexer: %v\n", err)

^

tests/e2e/e2e_test.go:109:6: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%v", stdout.String())

^

tests/e2e/e2e_test.go:110:6: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%v", stderr.String())

^

tests/e2e/e2e_test.go:113:6: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%v", stdout.String())

^

tests/integration/integration_test.go:124:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Error initializing test database: %v\n", err)

^

tests/integration/integration_test.go:135:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("util.RemoveAll: %v\n", err)

^

tests/integration/integration_test.go:139:3: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("Unable to remove repo indexer: %v\n", err)

^

tests/integration/repo_test.go:357:4: use of `fmt.Printf` forbidden by pattern `^(fmt\.Print(|f|ln)|print|println)$` (forbidigo)

fmt.Printf("%s", resp.Body)

^

```

</details>

---------

Co-authored-by: Giteabot <teabot@gitea.io>

If a comment dismisses a review, we need to load the reviewer to show

whose review has been dismissed.

Related to:

20b6ae0e53/templates/repo/issue/view_content/comments.tmpl (L765-L770)

We don't need `.Review.Reviewer` for all comments, because

"dismissing" doesn't happen often, or we would have already received

error reports.

Before, there was a `log/buffer.go`, but that design is not general, and it introduces a lot of irrelevant `Content() (string, error)` and `return "", fmt.Errorf("not supported")` code.

The old `log/buffer.go` is also difficult to use: developers have to write a lot of `Contains` and `Sleep` code.

The new `LogChecker` is designed as a general way to assert that certain messages do or do not appear in logs.

Close #24195

Some of the changes are taken from my other fix

f07b0de997

in #20147 (although that PR was discarded ....)

The bug is:

1. The old code doesn't handle `removedfile` event correctly

2. The old code doesn't provide attachments for type=CommentTypeReview

This PR doesn't intend to refactor the "upload" code to a perfect state

(to avoid making the review difficult), so some legacy styles are kept.

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

Close: #23738

The actual cause of the `500 Internal Server Error` in the issue is not what is described in the issue.

The actual cause is that, after a team is deleted, any PR that requested a review from that team has a comment that can no longer be matched to a team by `assgin_team_id`. As a result, the value of `.AssigneeTeam` (see the code referenced below) is `nil`, which causes the `500` error.

1c8bc4081a/templates/repo/issue/view_content/comments.tmpl (L691-L695)

To fix this bug, there are the following problems to be resolved:

- [x] 1. ~~Store the name of the team in the `content` column when inserting the `comment` into the DB, in case we cannot get the name of the team after it is deleted. For comments that already exist, just display "Unknown Team".~~ Just display "Ghost Team" in the comment if the assigned team is deleted.

- [x] 2. Delete the PR and team binding (the rows in table `review` where `review_team_id = ${team_id}`) when deleting a team.

- [x] 3. For binding rows that already exist and are not deleted in table `review`, ~~we can delete these rows when executing migrations.~~ they do not affect functionality, so they are not deleted.
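The fallback in item 1 can be sketched as a nil-safe helper. This is only an illustration: the `Team` type and the `teamDisplayName` function are hypothetical names, not taken from the Gitea codebase.

```go
package main

import "fmt"

// Team is a hypothetical, reduced stand-in for the real team model.
type Team struct {
	Name string
}

// teamDisplayName returns a placeholder when the assigned team has been
// deleted, so callers never dereference a nil team.
func teamDisplayName(t *Team) string {
	if t == nil {
		return "Ghost Team"
	}
	return t.Name
}

func main() {
	fmt.Println(teamDisplayName(&Team{Name: "reviewers"}))
	fmt.Println(teamDisplayName(nil))
}
```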

This allows usernames, and the emails connected to them, to be reserved and not reused.

Use case: I manage an instance with open registration, and sometimes when users are deleted for spam (or other purposes), their usernames are freed up and they sign up again with the same information.

This could also be used to reserve usernames, and block them from being

registered (in case an instance would like to block certain things

without hardcoding the list in code and compiling from scratch).

This is an MVP, that will allow for future work where you can set

something as reserved via the interface.

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

As promised in #23817, I have made this PR to make release download URLs predictable. It currently follows the schema `<repo>/releases/download/<tag>/<filename>`. This already works, but it is not shown anywhere in the UI or the API. The problem is that it is currently possible for a release to have multiple files with the same name (why do we even allow this?). I have written some code to check whether a release has two or more files with the same name. If so, it uses the old `attachments/<uuid>` URL; if not, it uses the new URL.

I have also changed `<repo>/releases/download/<tag>/<filename>` to serve the file directly instead of redirecting, so people who use an automatic update checker don't end up with the `attachments/<uuid>` URL.
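A rough sketch of the duplicate-name check described above. The `Attachment` type, the `downloadURLs` helper, and the exact URL layout are assumptions for illustration. The fallback is applied to the whole release here, which is only one possible reading of the description.

```go
package main

import (
	"fmt"
	"net/url"
)

// Attachment is a hypothetical, reduced view of a release attachment.
type Attachment struct {
	UUID string
	Name string
}

// downloadURLs returns one URL per attachment. If any file name occurs more
// than once, every attachment falls back to the UUID-based URL (assumption).
func downloadURLs(repoLink, tag string, atts []Attachment) []string {
	seen := make(map[string]bool, len(atts))
	duplicate := false
	for _, a := range atts {
		if seen[a.Name] {
			duplicate = true
			break
		}
		seen[a.Name] = true
	}

	urls := make([]string, 0, len(atts))
	for _, a := range atts {
		if duplicate {
			urls = append(urls, "/attachments/"+a.UUID)
			continue
		}
		urls = append(urls, repoLink+"/releases/download/"+
			url.PathEscape(tag)+"/"+url.PathEscape(a.Name))
	}
	return urls
}

func main() {
	atts := []Attachment{{UUID: "a", Name: "app.zip"}, {UUID: "b", Name: "app.tar.gz"}}
	for _, u := range downloadURLs("/owner/repo", "v1.0", atts) {
		fmt.Println(u)
	}
}
```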

Fixes #10919

---------

Co-authored-by: a1012112796 <1012112796@qq.com>

Without this patch, the setting SSH.StartBuiltinServer decides whether

the native (Go) implementation is used rather than calling 'ssh-keygen'.

It's possible for 'using ssh-keygen' and 'using the built-in server' to

be independent.

In fact, the gitea rootless container doesn't ship ssh-keygen and can be

configured to use the host's SSH server - which will cause the public

key parsing mechanism to break.

This commit changes the decision to be based on SSH.KeygenPath instead.

Any existing configurations with a custom KeygenPath set will continue

to function. The new default value of '' selects the native version. The

downside of this approach is that anyone who has been relying on plain

'ssh-keygen' to have special properties will now be using the native

version instead.

I assume the exec-variant is only there because /x/crypto/ssh didn't

support ssh-ed25519 until 2016. I don't see any other reason for using

it so it might be an acceptable risk.

Fixes #23363

EDIT: this message was garbled when I tried to get the commit

description back in.. Trying to reconstruct it:

## ⚠️ BREAKING ⚠️ Users who don't have SSH.KeygenPath

explicitly set and rely on the ssh-keygen binary need to set

SSH.KeygenPath to 'ssh-keygen' in order to be able to continue using it

for public key parsing.

There was something else but I can't remember at the moment.

EDIT2: It was about `make test` and `make lint`. Can't get them to run.

To reproduce the issue, I installed `golang` in `docker.io/node:16` and

got:

```

...

go: mvdan.cc/xurls/v2@v2.4.0: unknown revision mvdan.cc/xurls/v2.4.0

go: gotest.tools/v3@v3.4.0: unknown revision gotest.tools/v3.4.0

...

go: gotest.tools/v3@v3.0.3: unknown revision gotest.tools/v3.0.3

...

go: error loading module requirements

```

Signed-off-by: Leon M. Busch-George <leon@georgemail.eu>

Right now the author search dropdown might take a long time to load if the number of authors is huge.

Example: (In the video below, there are about 10000 authors, and it

takes about 10 seconds to open the author dropdown)

https://user-images.githubusercontent.com/17645053/229422229-98aa9656-3439-4f8c-9f4e-83bd8e2a2557.mov

Possible improvements can be made, which will take 2 steps (Thanks to

@wolfogre for advice):

Step 1:

Backend: Add a new api, which returns a limit of 30 posters with matched

prefix.

Frontend: Change the search behavior from frontend search (Fomantic search) to backend search (when the input changes, send a request to get the authors matching the current search prefix).

Step 2:

Backend: Optimize the api in step 1 using indexer to support fuzzy

search.

This PR implements the first step. The main changes:

1. Added API: `GET /{type:issues|pulls}/posters`, which returns up to 30 users with a matching prefix (the prefix is sent as a query parameter). If `DEFAULT_SHOW_FULL_NAME` in `custom/conf/app.ini` is set to true, the search also fuzzy-matches full names.

2. Added a tooltip saying "Shows a maximum of 30 users" to the author

search dropdown

3. Change the search behavior from frontend search to backend search
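The backend behavior in item 1 can be approximated with an in-memory stand-in. The real endpoint queries the database; `searchPosters` and `maxPosters` are hypothetical names used only for this sketch.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// maxPosters mirrors the limit of 30 users mentioned above.
const maxPosters = 30

// searchPosters returns at most maxPosters names that start with prefix,
// compared case-insensitively and ordered with sort.Strings (byte order).
func searchPosters(names []string, prefix string) []string {
	prefix = strings.ToLower(prefix)
	matched := make([]string, 0, maxPosters)
	for _, n := range names {
		if strings.HasPrefix(strings.ToLower(n), prefix) {
			matched = append(matched, n)
		}
	}
	sort.Strings(matched)
	if len(matched) > maxPosters {
		matched = matched[:maxPosters]
	}
	return matched
}

func main() {
	names := []string{"alice", "Albert", "bob", "alina"}
	fmt.Println(searchPosters(names, "al"))
}
```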

After:

https://user-images.githubusercontent.com/17645053/229430960-f88fafd8-fd5d-4f84-9df2-2677539d5d08.mov

Fixes: https://github.com/go-gitea/gitea/issues/22586

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: silverwind <me@silverwind.io>

There is no fork concept in agit flow, anyone with read permission can

push `refs/for/<target-branch>/<topic-branch>` to the repo. So we should

treat it as a fork pull request because it may be from an untrusted

user.

Originally, there was one unified team unit permission, stored as `Team.Authorize` in the DB.

But since https://github.com/go-gitea/gitea/pull/17811, different units are allowed to have different permissions.

The old code was designed only for the old model. So after #17811, org users who have write permission for other units but no permission for packages could still get write permission for packages.

Co-authored-by: delvh <dev.lh@web.de>

The old code just parses an invalid key to `TypeInvalid` and uses it as

normal, and duplicate keys will be kept.

So this PR ignores invalid keys, logs a warning, and deduplicates valid units.
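The parsing rule can be sketched as follows. The `knownUnits` set and the `parseUnitKeys` helper are illustrative assumptions, not the actual implementation.

```go
package main

import (
	"fmt"
	"log"
)

// knownUnits is a hypothetical subset of valid unit keys.
var knownUnits = map[string]bool{
	"repo.code":    true,
	"repo.issues":  true,
	"repo.pulls":   true,
	"repo.actions": true,
}

// parseUnitKeys drops unknown keys with a warning and removes duplicates
// while preserving the order of first appearance.
func parseUnitKeys(keys []string) []string {
	seen := make(map[string]bool, len(keys))
	units := make([]string, 0, len(keys))
	for _, k := range keys {
		if !knownUnits[k] {
			log.Printf("ignoring invalid unit key %q", k)
			continue
		}
		if seen[k] {
			continue
		}
		seen[k] = true
		units = append(units, k)
	}
	return units
}

func main() {
	fmt.Println(parseUnitKeys([]string{"repo.code", "actions.actions", "repo.code", "repo.actions"}))
}
```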

I neglected that the `NameKey` of `Unit` is not only for translation,

but also configuration. So it should be `repo.actions` to maintain

consistency.

## ⚠️ BREAKING ⚠️

If users already use `actions.actions` in `DISABLED_REPO_UNITS` or

`DEFAULT_REPO_UNITS`, it will be treated as an invalid unit key.

Adds API endpoints to manage issue/PR dependencies

* `GET /repos/{owner}/{repo}/issues/{index}/blocks` List issues that are

blocked by this issue

* `POST /repos/{owner}/{repo}/issues/{index}/blocks` Block the issue

given in the body by the issue in path

* `DELETE /repos/{owner}/{repo}/issues/{index}/blocks` Unblock the issue

given in the body by the issue in path

* `GET /repos/{owner}/{repo}/issues/{index}/dependencies` List an

issue's dependencies

* `POST /repos/{owner}/{repo}/issues/{index}/dependencies` Create a new issue dependency

* `DELETE /repos/{owner}/{repo}/issues/{index}/dependencies` Remove an

issue dependency

Closes https://github.com/go-gitea/gitea/issues/15393

Closes #22115

Co-authored-by: Andrew Thornton <art27@cantab.net>

Since #23493 has conflicts with latest commits, this PR is my proposal

for fixing #23371

Details are in the comments

And refactor the `modules/options` module, to make it always use

"filepath" to access local files.

Benefits:

* No need to do `util.CleanPath(strings.ReplaceAll(p, "\\", "/"))), "/")` any more (this appeared in more than one place before)

* The function behaviors are clearly defined

A part of https://github.com/go-gitea/gitea/pull/22865

At first, I thought we did not need 3 ProjectTypes, since we can check the user type, but that turned out not to be database friendly.

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: 6543 <6543@obermui.de>

This PR fixes the tags sort issue mentioned in #23432

The tags in the dropdown should be sorted by time in descending order, but they are not. When getting tags, it executes `git tag sort --sort=-taggerdate`. Git supports two types of tags, lightweight and annotated, and `git tag sort --sort=-taggerdate` doesn't work with lightweight tags, so it does not give the correct result. This PR adds `GetTagNamesByRepoID` to get tags from the database so that the tags are sorted.

This change is also applied to the dropdown used when comparing branches.

Dropdown places:

<img width="369" alt="截屏2023-03-15 14 25 39"

src="https://user-images.githubusercontent.com/17645053/225224506-65a72e50-4c11-41d7-8187-a7e9c7dab2cb.png">

<img width="675" alt="截屏2023-03-15 14 25 27"

src="https://user-images.githubusercontent.com/17645053/225224526-65ce8008-340c-43f6-aa65-b6bd9e1a1bf1.png">

`namedBlob` turned out to be a poor imitation of a `TreeEntry`. Using

the latter directly shortens this code.

This partially undoes https://github.com/go-gitea/gitea/pull/23152/,

which I found a merge conflict with, and also expands the test it added

to cover the subtle README-in-a-subfolder case.

This is a simple endpoint that adds the ability to rename users to the admin API.

Note: this is not in a mergeable state. It would be better if this was

handled by a PATCH/POST to the /api/v1/admin/users/{username} endpoint

and the username is modified.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Fixes https://github.com/go-gitea/gitea/issues/22676

Context data `IsOrganizationMember` and `IsOrganizationOwner` are used to control the visibility of the `people` and `team` tabs.

2871ea0809/templates/org/menu.tmpl (L19-L40)

Because the user projects page is reused, the User Context is changed to the Organization Context, but the values of `IsOrganizationMember` and `IsOrganizationOwner` are not set.

I reused func `HandleOrgAssignment` to add them to the ctx, but it may set some unnecessary variables; I don't know whether that is OK.

I found there is a missing `PageIsViewProjects` at create project page.

Add test coverage to the important features of

[`routers.web.repo.renderReadmeFile`](067b0c2664/routers/web/repo/view.go (L273));

namely that:

- it can handle looking in docs/, .gitea/, and .github/

- it can handle choosing between multiple competing READMEs

- it prefers the localized README to the markdown README to the

plaintext README

- it can handle broken symlinks when processing all the options

- it uses the name of the symlink, not the name of the target of the

symlink

Replace #23350.

Refactor `setting.Database.UseMySQL` to

`setting.Database.Type.IsMySQL()`.

To avoid mismatching between `Type` and `UseXXX`.

This refactor can fix the bug mentioned in #23350, so it should be

backported.

`renderReadmeFile` needs `readmeTreelink` as parameter but gets

`treeLink`.

Their values look like the following:

`treeLink`: `/{OwnerName}/{RepoName}/src/branch/{BranchName}`

`readmeTreelink`:

`/{OwnerName}/{RepoName}/src/branch/{BranchName}/{ReadmeFileName}`

`path.Dir` in

8540fc45b1/routers/web/repo/view.go (L316)

should convert `readmeTreelink` into

`/{OwnerName}/{RepoName}/src/branch/{BranchName}` instead of the current

`/{OwnerName}/{RepoName}/src/branch`.
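The difference is easy to reproduce with `path.Dir` from the standard library. The owner, repo, and branch names below are placeholders, and `readmeDir` is a hypothetical wrapper used only for this demonstration.

```go
package main

import (
	"fmt"
	"path"
)

// readmeDir returns the directory part of a link, as path.Dir does.
func readmeDir(link string) string {
	return path.Dir(link)
}

func main() {
	treeLink := "/owner/repo/src/branch/main"
	readmeTreelink := treeLink + "/README.md"

	// Passing treeLink strips the branch name.
	fmt.Println(readmeDir(treeLink))
	// Passing readmeTreelink strips only the README file name.
	fmt.Println(readmeDir(readmeTreelink))
}
```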

Fixes #23151

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Co-authored-by: silverwind <me@silverwind.io>

Extract from #11669 and enhancement to #22585 to support exclusive

scoped labels in label templates

* Move label template functionality to label module

* Fix handling of color codes

* Add Advanced label template

When a change is pushed to the default branch and many pull requests are

open for that branch, conflict checking can take some time.

Previously it would go from oldest to newest pull request. Now

prioritize pull requests that are likely being actively worked on or

prepared for merging.

This only changes the order within one push to one repository, but the

change is trivial and can already be quite helpful for smaller Gitea

instances where a few repositories have most pull requests. A global

order would require deeper changes to queues.
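As a sketch of the reordering idea, assuming that "most recently updated" is the proxy for "actively worked on" (the text above does not state the exact criterion), with a hypothetical `PullRequest` type:

```go
package main

import (
	"fmt"
	"sort"
)

// PullRequest is a hypothetical, reduced model with only the fields needed here.
type PullRequest struct {
	Index       int64
	UpdatedUnix int64
}

// prioritize orders pull requests so that the most recently updated ones
// are conflict-checked first. Ties keep their original relative order.
func prioritize(prs []PullRequest) {
	sort.SliceStable(prs, func(i, j int) bool {
		return prs[i].UpdatedUnix > prs[j].UpdatedUnix
	})
}

func main() {
	prs := []PullRequest{
		{Index: 1, UpdatedUnix: 100},
		{Index: 2, UpdatedUnix: 300},
		{Index: 3, UpdatedUnix: 200},
	}
	prioritize(prs)
	for _, pr := range prs {
		fmt.Println(pr.Index)
	}
}
```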

The name of the job or step comes from the workflow file, while the name

of the runner comes from its registration. If the strings used for these

names are too long, they could cause db issues.
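One common guard against over-long names is truncating before storing them. The sketch below is not necessarily what this change does; the `truncate` helper and the limit passed to it are assumptions.

```go
package main

import "fmt"

// truncate shortens s to at most limit runes, so multi-byte characters
// are never cut in half.
func truncate(s string, limit int) string {
	r := []rune(s)
	if len(r) <= limit {
		return s
	}
	return string(r[:limit])
}

func main() {
	fmt.Println(truncate("build-and-test-on-every-platform", 14))
}
```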

`GetActiveStopwatch` and `HasUserStopwatch` are hot code paths that are called repeatedly; in the CPU profile for TestGit they account for 0.44 seconds of CPU time. This PR reduces this time to 80ms.

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: delvh <leon@kske.dev>

This includes pull requests that you approved, requested changes or

commented on. Currently such pull requests are not visible in any of the

filters on /pulls, while they may need further action like merging, or

prodding the author or reviewers.

Especially when working with a large team on a repository it's helpful

to get a full overview of pull requests that may need your attention,

without having to sift through the complete list.

Unfortunately xorm's `builder.Select(...).From(...)` does not escape the

table names. This is mostly not a problem but is a problem with the

`user` table.

This PR simply escapes the user table. No other uses of `From("user")` were found in the codebase, so I think this should be all that is needed.

Fix #23064

Signed-off-by: Andrew Thornton <art27@cantab.net>

Partially fix #23050

After #22294 was merged, a warning like `cannot get context cache` is always logged at startup. This should not affect real-world usage, but it is annoying. This PR fixes the problem: at startup, system settings are no longer looked up in the cache but are read from the database directly.

---------

Co-authored-by: Lauris BH <lauris@nix.lv>

I found `FindAndCount`, which can `Find` and `Count` at the same time.

Maybe it is better to use it in `FindProjects`.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

minio/sha256-simd provides additional acceleration for SHA256 using

AVX512, SHA Extensions for x86 and ARM64 for ARM.

It provides a drop-in replacement for crypto/sha256 and if the

extensions are not available it falls back to standard crypto/sha256.

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Ensure that issue pullrequests are loaded before trying to set the

self-reference.

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <leon@kske.dev>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

The API to create tokens is missing the ability to set the required

scopes for tokens, and to show them on the API and on the UI.

This PR adds this functionality.

Signed-off-by: Andrew Thornton <art27@cantab.net>

During the recent hash algorithm change it became clear that the choice

of password hash algorithm plays a role in the time taken for CI to run.

Therefore, as an attempt to improve CI, we should consider using a dummy

hashing algorithm instead of a real hashing algorithm.

This PR creates a dummy algorithm which is then set as the default

hashing algorithm during tests that use the fixtures. This hopefully

will cause a reduction in the time it takes for CI to run.

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Some bugs are caused by a lack of unit tests in fundamental packages. This PR refactors the `setting` package so that creating unit tests is easier than before.

- Each `LoadFromXXX` has been split into two functions: `InitProviderFromXXX` and `LoadCommonSettings`. The first only includes the code to create a new ini file. The second loads the common settings.

- It also renames all functions in setting from `newXXXService` to `loadXXXSetting` or `loadXXXFrom` to make the function names less confusing.

- Rename `XORMLog` to `SQLLog` because it's a better name.

Maybe we should eventually move these `loadXXXSetting` functions into the `XXXInit` functions? Any ideas?

---------

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: delvh <dev.lh@web.de>

This PR refactors and improves the password hashing code within Gitea and makes it possible for server administrators to set the password hashing parameters.

In addition it takes the opportunity to adjust the settings for `pbkdf2`

in order to make the hashing a little stronger.

The majority of this work was inspired by PR #14751 and I would like to

thank @boppy for their work on this.

Thanks to @gusted for the suggestion to adjust the `pbkdf2` hashing

parameters.

Close #14751

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Add a new "exclusive" option per label. This makes it so that when the

label is named `scope/name`, no other label with the same `scope/`

prefix can be set on an issue.

The scope is determined by the last occurrence of `/`, so for example

`scope/alpha/name` and `scope/beta/name` are considered to be in

different scopes and can coexist.
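The scope rule can be sketched with `strings.LastIndex`. The `exclusiveScope` name and the handling of leading or trailing slashes are assumptions made for this example.

```go
package main

import (
	"fmt"
	"strings"
)

// exclusiveScope returns everything before the last "/" in a label name,
// or "" when the name has no usable scope prefix.
func exclusiveScope(name string) string {
	i := strings.LastIndex(name, "/")
	// No slash, a leading slash, or a trailing slash: treat as unscoped (assumption).
	if i <= 0 || i == len(name)-1 {
		return ""
	}
	return name[:i]
}

func main() {
	for _, n := range []string{"scope/name", "scope/alpha/name", "scope/beta/name", "bug"} {
		fmt.Printf("%q -> %q\n", n, exclusiveScope(n))
	}
}
```

Two labels conflict only when their scopes are equal and non-empty, which is why `scope/alpha/name` and `scope/beta/name` can coexist.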

Exclusive scopes are not enforced by any database rules, however they

are enforced when editing labels at the models level, automatically

removing any existing labels in the same scope when either attaching a

new label or replacing all labels.

In menus use a circle instead of checkbox to indicate they function as

radio buttons per scope. Issue filtering by label ensures that only a

single scoped label is selected at a time. Clicking with alt key can be

used to remove a scoped label, both when editing individual issues and

batch editing.

Label rendering refactor for consistency and code simplification:

* Labels now consistently have the same shape, emojis and tooltips

everywhere. This includes the label list and label assignment menus.

* In label list, show description below label same as label menus.

* Don't use exactly black/white text colors to look a bit nicer.

* Simplify text color computation. There is no point computing luminance

in linear color space, as this is a perceptual problem and sRGB is

closer to perceptually linear.

* Increase height of label assignment menus to show more labels. Showing

only 3-4 labels at a time leads to a lot of scrolling.

* Render all labels with a new RenderLabel template helper function.

Label creation and editing in multiline modal menu:

* Change label creation to open a modal menu like label editing.

* Change menu layout to place name, description and colors on separate

lines.

* Don't color cancel button red in label editing modal menu.

* Align text to the left in the modal menu for better readability and consistency with the settings layout elsewhere.

Custom exclusive scoped label rendering:

* Display scoped label prefix and suffix with slightly darker and

lighter background color respectively, and a slanted edge between them

similar to the `/` symbol.

* In menus exclusive labels are grouped with a divider line.

---------

Co-authored-by: Yarden Shoham <hrsi88@gmail.com>

Co-authored-by: Lauris BH <lauris@nix.lv>

Unfortunately #20896 does not completely prevent Data too long issues

and GPGKeyImport needs to be increased too.

Fix #22896

Signed-off-by: Andrew Thornton <art27@cantab.net>

Allow back-dating user creation via the `adminCreateUser` API operation.

`CreateUserOption` now has an optional field `created_at`, which can

contain a datetime-formatted string. If this field is present, the

user's `created_unix` database field will be updated to its value.

This is important for Blender's migration of users from Phabricator to

Gitea. There are many users, and the creation timestamp of their account

can give us some indication as to how long someone's been part of the

community.

The back-dating is done in a separate query that just updates the user's

`created_unix` field. This was the easiest and cleanest way I could

find, as in the initial `INSERT` query the field always is set to "now".
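A minimal sketch of turning the optional `created_at` value into a Unix timestamp for the follow-up update. It assumes the string is RFC 3339; `createdUnix` is a hypothetical helper, not the actual API code.

```go
package main

import (
	"fmt"
	"time"
)

// createdUnix converts an optional RFC 3339 timestamp to Unix seconds.
// ok is false when no timestamp was supplied, meaning no back-dating.
func createdUnix(createdAt string) (unix int64, ok bool, err error) {
	if createdAt == "" {
		return 0, false, nil
	}
	t, err := time.Parse(time.RFC3339, createdAt)
	if err != nil {
		return 0, false, err
	}
	return t.Unix(), true, nil
}

func main() {
	unix, ok, err := createdUnix("2015-06-01T12:00:00Z")
	fmt.Println(unix, ok, err)
}
```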

Fix #22797.

## Reason

If a comment was migrated from another platform, it may have an original author, and its poster is never the original author. When the `roleDescriptor` func gets the poster's role descriptor for a comment, it does not check whether the comment has an original author. So the original authors of migrated comments might be shown with incorrect roles.

---------

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

To avoid loading the same data more than once in an HTTP request, we can use a context cache. For example, some pages load a user with the same ID from the database in different areas of the same page, but the code lives in two different, deeply nested pieces of logic. How should we share the user? With this PR, if both entry functions accept `context.Context` as the first parameter, we only need to refactor `GetUserByID` to reuse the user from the context cache. Then it will not be loaded twice in one HTTP request.

But of course, sometimes we would like to reload an object from the

database, that's why `RemoveContextData` is also exposed.

The core context cache is here. It defines a new context

```go
type cacheContext struct {
	ctx  context.Context
	data map[any]map[any]any
	lock sync.RWMutex
}

var cacheContextKey = struct{}{}

func WithCacheContext(ctx context.Context) context.Context {
	return context.WithValue(ctx, cacheContextKey, &cacheContext{
		ctx:  ctx,
		data: make(map[any]map[any]any),
	})
}
```

Then you can use the 4 functions below to read, write, and delete data within the same context.

```go
func GetContextData(ctx context.Context, tp, key any) any
func SetContextData(ctx context.Context, tp, key, value any)
func RemoveContextData(ctx context.Context, tp, key any)
func GetWithContextCache[T any](ctx context.Context, cacheGroupKey string, cacheTargetID any, f func() (T, error)) (T, error)
```

Then let's take a look at how `system.GetString` implements it.

```go
func GetSetting(ctx context.Context, key string) (string, error) {
	return cache.GetWithContextCache(ctx, contextCacheKey, key, func() (string, error) {
		return cache.GetString(genSettingCacheKey(key), func() (string, error) {
			res, err := GetSettingNoCache(ctx, key)
			if err != nil {
				return "", err
			}
			return res.SettingValue, nil
		})
	})
}
```

First, it checks whether the context data includes the setting object for the key. If not, it queries the global cache, which may be memory or a Redis cache. If that also misses, it gets the object from the database. Finally, if the object came from the global cache or the database, it is stored in the context cache.

An object stored in the context cache is only destroyed after the context is gone.

As part of administration sometimes it is appropriate to forcibly tell

users to update their passwords.

This PR creates a new command `gitea admin user must-change-password`

which will set the `MustChangePassword` flag on the provided users.

Signed-off-by: Andrew Thornton <art27@cantab.net>

As discussed in #22847 the helpers in helpers.less need to have a

separate prefix as they are causing conflicts with fomantic styles

This will allow us to have the `.gt-hidden { display:none !important; }` style that is needed for the reverted PR.

Of note in doing this I have noticed that there was already a conflict

with at least one chroma style which this PR now avoids.

I've also added in the `gt-hidden` style that matches the tailwind one

and switched the code that needed it to use that.

Signed-off-by: Andrew Thornton <art27@cantab.net>

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Add setting to allow edits by maintainers by default, to avoid having to

often ask contributors to enable this.

This also reorganizes the pull request settings UI to improve clarity.

It was unclear which checkbox options were there to control available

merge styles and which merge styles they correspond to.

Now there is a "Merge Styles" label followed by the merge style options

with the same name as in other menus. The remaining checkboxes were

moved to the bottom, ordered roughly by typical order of operations.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

When we updated the .golangci.yml for 1.20 we should have used a string

as 1.20 is not a valid number.
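The underlying issue is that an unquoted `1.20` is read as a floating-point number, which loses the trailing zero. The sketch below does not use a YAML parser; it only illustrates that float round-trip with `strconv`, and `asNumber` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"strconv"
)

// asNumber mimics reading a version as a float and printing it back.
func asNumber(version string) (string, error) {
	f, err := strconv.ParseFloat(version, 64)
	if err != nil {
		return "", err
	}
	return strconv.FormatFloat(f, 'f', -1, 64), nil
}

func main() {
	s, err := asNumber("1.20")
	fmt.Println(s, err)
}
```

Quoting the value keeps it a string, so the version stays `1.20`.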

In doing so we need to restore the nolint markings within the pq driver.

Signed-off-by: Andrew Thornton <art27@cantab.net>

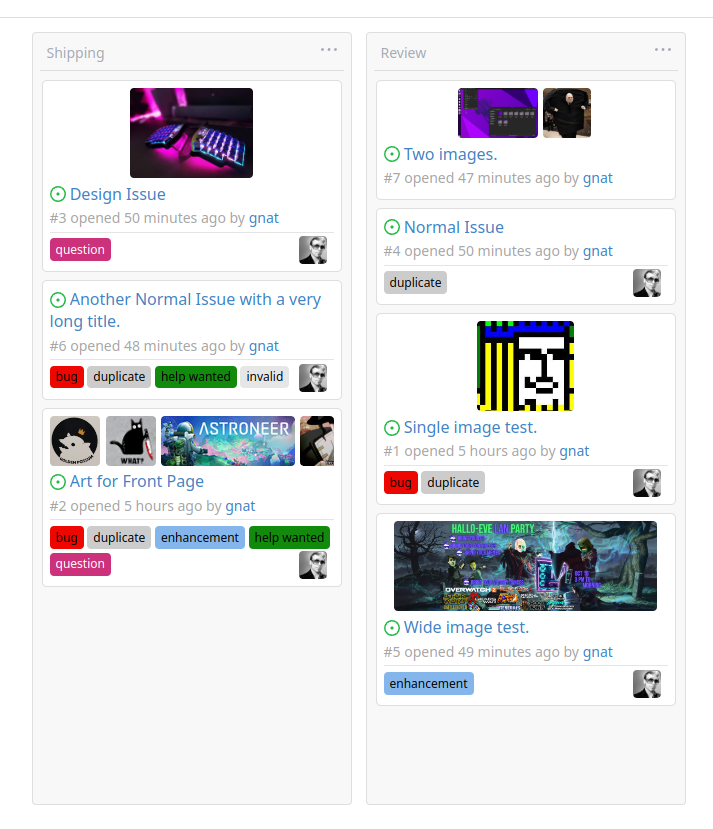

Original Issue: https://github.com/go-gitea/gitea/issues/22102

This addition would be a big benefit for design and art teams using the

issue tracking.

The preview will be the latest "image type" attachments on an issue: simple, and it allows for automatic updates of the cover image as issue progress is made!

This would make Gitea competitive with Trello... wouldn't it be amazing

to say goodbye to Atlassian products? Ha.

First image is the most recent, the SQL will fetch up to 5 latest images

(URL string).

All images supported by browsers plus upcoming formats: *.avif *.bmp

*.gif *.jpg *.jpeg *.jxl *.png *.svg *.webp

The CSS will try to center-align images until it cannot, then it will

left align with overflow hidden. Single images get to be slightly

larger!

Tested so far on: Chrome, Firefox, Android Chrome, Android Firefox.

Current revision with light and dark themes:

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: delvh <dev.lh@web.de>

In Go code, HTMLURL should be only used for external systems, like

API/webhook/mail/notification, etc.

If a URL is used by `Redirect` or rendered in a template, it should be a

relative URL (aka `Link()` in Gitea)
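The distinction can be illustrated with a reduced model. The `Repo` type, the `appURL` constant, and the URL layout are assumptions for this sketch and ignore details such as sub-path installs and escaping.

```go
package main

import "fmt"

// appURL is a hypothetical configured root URL of the instance.
const appURL = "https://git.example.com/"

// Repo is a reduced stand-in for a repository model.
type Repo struct {
	Owner, Name string
}

// Link returns a relative URL, suitable for redirects and templates.
func (r Repo) Link() string {
	return "/" + r.Owner + "/" + r.Name
}

// HTMLURL returns an absolute URL, suitable for API responses, webhooks,
// mail, and other external consumers.
func (r Repo) HTMLURL() string {
	return appURL + r.Owner + "/" + r.Name
}

func main() {
	r := Repo{Owner: "owner", Name: "repo"}
	fmt.Println(r.Link())
	fmt.Println(r.HTMLURL())
}
```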

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

I haven't tested `runs_list.tmpl` but I think it could be right.

After this PR, besides the `<meta .. HTMLURL>` in html head, the only

explicit HTMLURL usage is in `pull_merge_instruction.tmpl`, which

doesn't affect users too much and it's difficult to fix at the moment.

There are still many usages of `AppUrl` in the templates (e.g. the

package help manual). They are similar problems to the HTMLURL in

pull_merge_instruction, and they might be fixed together in the future.

Diff without space:

https://github.com/go-gitea/gitea/pull/22831/files?diff=unified&w=1

Fixes #19555

Test-Instructions:

https://github.com/go-gitea/gitea/pull/21441#issuecomment-1419438000

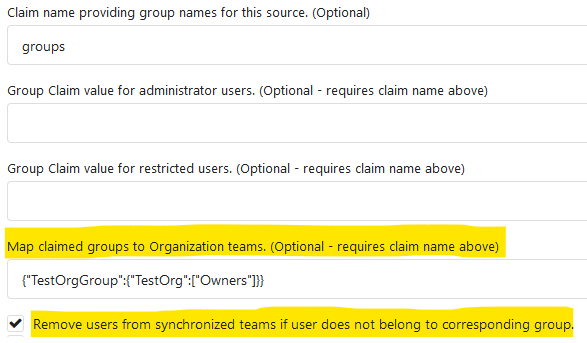

This PR implements the mapping of user groups provided by OIDC providers

to orgs teams in Gitea. The main part is a refactoring of the existing

LDAP code to make it usable from different providers.

Refactorings:

- Moved the router auth code from module to service because of import

cycles

- Changed some model methods to take a `Context` parameter

- Moved the mapping code from LDAP to a common location

I've tested it with Keycloak but other providers should work too. The

JSON mapping format is the same as for LDAP.
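
To illustrate how such a mapping could be applied, here is a sketch that assumes the JSON has the shape group name, then organization, then list of teams. The type and function names (`GroupTeamMap`, `parseGroupTeamMap`, `teamsForGroups`) are hypothetical and not taken from the PR:

```go
package main

import (
	"encoding/json"
	"sort"
)

// GroupTeamMap maps a provider group to organizations and their teams.
// Assumed shape: {"group": {"org": ["team1", "team2"]}}.
type GroupTeamMap map[string]map[string][]string

// parseGroupTeamMap decodes the JSON mapping.
func parseGroupTeamMap(raw string) (GroupTeamMap, error) {
	var m GroupTeamMap
	if err := json.Unmarshal([]byte(raw), &m); err != nil {
		return nil, err
	}
	return m, nil
}

// teamsForGroups returns the sorted, de-duplicated "org/team" entries that
// the given provider groups map to.
func teamsForGroups(m GroupTeamMap, groups []string) []string {
	seen := map[string]bool{}
	for _, g := range groups {
		for org, teams := range m[g] {
			for _, t := range teams {
				seen[org+"/"+t] = true
			}
		}
	}
	out := make([]string, 0, len(seen))
	for k := range seen {
		out = append(out, k)
	}
	sort.Strings(out)
	return out
}
```

The groups passed to `teamsForGroups` would come from the claim the OIDC provider sends for the user; groups without an entry in the mapping are simply ignored.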

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Partially fix #19345

This PR adds `Link` methods for several objects. The `Link`

methods work like `HTMLURL`, except that they return a relative URL

instead of an absolute one. Most `HTMLURL` usages in the UI have been

replaced with `Link` so that users can visit them from a different domain or IP.

This PR also introduces a new JavaScript configuration value,

`window.config.reqAppUrl`. Unlike `appUrl`, it is

still an absolute URL, but its domain has been replaced with the currently

requested domain.
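
One way to derive such a value can be sketched as below. The function name `reqAppURL` is hypothetical, and the sketch assumes only the host part is swapped while scheme and path are kept:

```go
package main

import "net/url"

// reqAppURL returns appURL with its host replaced by the host of the
// current request. Hypothetical helper; scheme and path are left untouched.
func reqAppURL(appURL, requestHost string) (string, error) {
	u, err := url.Parse(appURL)
	if err != nil {
		return "", err
	}
	u.Host = requestHost
	return u.String(), nil
}
```

For example, with a configured URL of `https://gitea.example.com/sub/` and a request to `10.0.0.5:3000`, the result is `https://10.0.0.5:3000/sub/`.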

`PushToBaseRepo` should be called before

`notification.NotifyPullRequestSynchronized`.

Otherwise the notifier will get an old commit when reading branch

`pull/xxx/head`.

Found by ~#21937~ #22679.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

This PR fixes two problems. First, when filtering repository issues, only

repository-level projects are listed. Second, when listing open

issues, only open projects are displayed in the filter options, and when

listing closed issues, only closed projects are displayed in the filter

options.

In this PR, both repository-level and org/user-level projects will be

displayed in the filter, and both open and closed projects will be listed as

filter items.

---------

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

Every user can already disable the filter manually, so the explicit

setting is absolutely useless and only complicates the logic.

Previously, there was also unexpected behavior when multiple query

parameters were present.

---------

Co-authored-by: zeripath <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Most of the time forks are used for contributing code only, so not

having issues, projects, releases and packages is a better default for such

cases.

They can still be enabled in the settings.

A new option `DEFAULT_FORK_REPO_UNITS` is added to configure the default

units on forks.

Also add missing `repo.packages` unit to documentation.

code by: @brechtvl

## ⚠️ BREAKING ⚠️

When forking a repository, the fork will now have issues, projects,

releases, packages and wiki disabled. These can be enabled in the

repository settings afterwards. To change back to the previous default

behavior, configure `DEFAULT_FORK_REPO_UNITS` to be the same value as

`DEFAULT_REPO_UNITS`.

Co-authored-by: Brecht Van Lommel <brecht@blender.org>

Our trace logging is far from perfect and is difficult to follow.

This PR:

* Adds trace logging for process manager add and remove.

* Fixes an errant read file for git refs in getMergeCommit

* Brings in the pullrequest `String` and `ColorFormat` methods

introduced in #22568

* Adds a lot more logging in to testPR etc.

Ref #22578

---------

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Fix #21994.

And fix #19470.

While generating a new repo from a template, it does something like

"commit to git repo, re-fetch repo model from DB, and update default

branch if it's empty".

19d5b2f922/modules/repository/generate.go (L241-L253)

Unfortunately, when the repo is loaded from the DB, the default branch will be set to

`setting.Repository.DefaultBranch` if it's empty:

19d5b2f922/models/repo/repo.go (L228-L233)

I believe it's a very old temporary patch but has been kept for many

years, see:

[2d2d85bb](https://github.com/go-gitea/gitea/commit/2d2d85bb#diff-1851799b06733db4df3ec74385c1e8850ee5aedee70b8b55366910d22725eea8)

I know it's a risk to delete it, as it may lead to potential behavioral

changes, but we cannot keep the outdated `FIXME` forever. On the other

hand, an empty `DefaultBranch` does make sense: an empty repo doesn't

have one conceptually (actually, Gitea will still set it to

`setting.Repository.DefaultBranch` to make it safer).

The error reported when a user passes a private ssh key as their ssh

public key is not very nice.

This PR improves this slightly.

Ref #22693

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: delvh <dev.lh@web.de>

- Currently the function `GetUsersWhoCanCreateOrgRepo` uses a query that

can return duplicated users in the result. This can happen when

a user is in a team that either is the owner team or

has permission to create organization repositories.

- Add test code to simulate the above condition for user 3,

[`TestGetUsersWhoCanCreateOrgRepo`](a1fcb1cfb8/models/organization/org_test.go (L435))

is the test function that tests for this.

- The fix is quite trivial: use a map keyed by user ID in order to drop

duplicates.
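
The de-duplication idea can be sketched as follows. The `User` type is a hypothetical, simplified stand-in, and this version keeps the first occurrence of each user in order, which may differ from what the actual fix returns:

```go
package main

// User is a hypothetical, simplified user model.
type User struct {
	ID   int64
	Name string
}

// dedupeUsers drops repeated users, using a map keyed by user ID to
// remember which IDs have already been seen.
func dedupeUsers(users []User) []User {
	seen := make(map[int64]struct{}, len(users))
	out := make([]User, 0, len(users))
	for _, u := range users {
		if _, ok := seen[u.ID]; ok {
			continue // already emitted via another matching team
		}
		seen[u.ID] = struct{}{}
		out = append(out, u)
	}
	return out
}
```

A user who matches through two teams appears twice in the raw query result but only once after `dedupeUsers`.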

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Currently only a single project is supported, like milestones, not

multiple, like labels.

Implements #14298

Code by @brechtvl

---------

Co-authored-by: Brecht Van Lommel <brecht@blender.org>

Instead of re-creating, these should use the available `Link` methods

from the "parent" of the project, which also take sub-urls into account.

Signed-off-by: jolheiser <john.olheiser@gmail.com>

This commit adds support for specifying comment types when importing

with `gitea restore-repo`. It makes it possible to import issue changes,

such as "title changed" or "assigned user changed".

An earlier version of this pull request was made by Matti Ranta, in

https://future.projects.blender.org/blender-migration/gitea-bf/pulls/3

There are two changes with regard to Matti's original code:

1. The comment type was an `int64` in Matti's code, and is now using a

string. This makes it possible to use `comment_type: title`, which is

more reliable and future-proof than an index into an internal list in

the Gitea Go code.

2. Matti's code also had support for including labels, but in a way that

would require knowing the database ID of the labels before the import

even starts, which is impossible. This can be solved by using label

names instead of IDs; for simplicity I left that out of this PR.

When importing a repository via `gitea restore-repo`, external users

will get remapped to an admin user. This admin user is obtained via

`users.GetAdminUser()`, which unfortunately picks a more-or-less random

admin to return.

This makes it hard to predict which admin user will get assigned. This

patch orders the admins by ascending ID before choosing the first one,

i.e. it picks the admin with the lowest ID.
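
As an in-memory illustration of that rule (the patch itself orders the database query), assuming a hypothetical `User` type and helper name `firstAdmin`:

```go
package main

import (
	"errors"
	"sort"
)

// User is a hypothetical, simplified user model.
type User struct {
	ID      int64
	IsAdmin bool
}

// firstAdmin returns the admin with the lowest ID, so repeated calls give
// the same result regardless of the input order.
func firstAdmin(users []User) (User, error) {
	admins := make([]User, 0, len(users))
	for _, u := range users {
		if u.IsAdmin {
			admins = append(admins, u)
		}
	}
	if len(admins) == 0 {
		return User{}, errors.New("no admin user found")
	}
	sort.Slice(admins, func(i, j int) bool { return admins[i].ID < admins[j].ID })
	return admins[0], nil
}
```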

Even though it would be nicer to have full control over which user is

chosen, this at least gives us a predictable result.

This PR adds the support for scopes of access tokens, mimicking the

design of GitHub OAuth scopes.

The core logic changes are in `models/auth`, where the `AccessToken`

struct gains a `Scope` field. The normalized (no duplication of

scope), comma-separated scope string will be stored in the `access_token`

table in the database.
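
A simplified sketch of what such normalization could look like. The function name `normalizeScopes` is hypothetical, and this version only trims, de-duplicates, and sorts; the PR's real normalization may apply further rules:

```go
package main

import (
	"sort"
	"strings"
)

// normalizeScopes turns a comma-separated scope list into a canonical
// string with no duplicates, no empty entries, and a stable order.
func normalizeScopes(raw string) string {
	seen := map[string]struct{}{}
	var scopes []string
	for _, s := range strings.Split(raw, ",") {
		s = strings.TrimSpace(s)
		if s == "" {
			continue
		}
		if _, ok := seen[s]; ok {
			continue
		}
		seen[s] = struct{}{}
		scopes = append(scopes, s)
	}
	sort.Strings(scopes)
	return strings.Join(scopes, ",")
}
```

Storing the canonical form means two tokens created with the same scopes in a different order end up with identical `Scope` values.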

In `services/auth`, the scope will be stored in context, which will be

used by `reqToken` middleware in API calls. Only OAuth2 tokens will have

granular token scopes, while others like BasicAuth will default to scope

`all`.

A large amount of work happens in `routers/api/v1/api.go` and the

corresponding `tests/integration` tests, namely adding the necessary scopes

to each of the API calls as they fit.

- [x] Add `Scope` field to `AccessToken`

- [x] Add access control to all API endpoints